
Using cryptsetup / LUKS2 on SSHFS images

Occasionally, I need to open remote LUKS2 images (i.e., files) that I access via SSHFS. This used to work just fine: mount an sshfs, run cryptsetup luksOpen and access the underlying filesystem. However, a recent cryptsetup upgrade introduced (or changed?) its locking mechanism. Now, before opening an image file, it tries to acquire a read lock, which will fail with ENOSYS (Function not implemented) on sshfs mountpoints. This, in turn, causes cryptsetup to report "Failed to acquire read lock on device" and "Device ... is not a valid LUKS device.".

There doesn't seem to be a simple way of disabling this (admittedly, in 99% of cases desirable) feature, so for now I'm working around it by just having flock always return success, thanks to the magic of LD_PRELOAD and a flock stub:

#include <sys/file.h>

int flock(int fd, int operation)

{

return 0;

}

Compile as follows:

> ${CC} -O2 -Wall -fPIC -c -o ignoreflock.o ignoreflock.c

> ${CC} -fPIC -O2 -Wall -shared -Wl,-soname,ignoreflock.so.0 -o ignoreflock.so.0 ignoreflock.o -ldl

And then call LD_PRELOAD=..../ignoreflock.so.0 cryptsetup luksOpen ...

(or sudo env LD_PRELOAD=..../ignoreflock.so.0 cryptsetup luksOpen ...).

ignoreflock provides a handy stub, Makefile and wrapper script for this.

Stop IDs in EFA APIs

EFA-based local transit APIs have two kinds of stop identifiers: numbers such

as 20009289 and codes such as de:05113:9289.

travelynx needs a single type of ID, and this post is

meant mostly for myself to find out which ID is most useful. The numeric one

would of course be ideal, as travelynx already uses numeric IDs in its stations

table.

Numbers

DM_REQUEST: Available as stopID in each departureList entry.

STOPSEQCOORD_REQUEST: Available as parent.properties.stopId in each locationSequence entry.

STOPFINDER_REQUEST: Available as stateless and ref.id.

COORD_REQUEST: missing.

Codes

DM_REQUEST: missing.

STOPSEQCOORD_REQUEST: Available as parent.id in each locationSequence entry.

Outside of Germany, the format changes, e.g. placeID:27006983:1 for a border

marker (not an actual stop) and NL:S:vl for Venlo Bf.

STOPFINDER_REQUEST: Available as ref.gid.

COORD_REQUEST: Available as id and properties.STOP_GLOBAL_ID in each locations entry.

API Input

DM_REQUEST: Accepts stop names, numbers, and codes

STOPSEQCOORD_REQUEST: Accepts stop numbers and codes

Examples

- LinzAG Ebelsberg Bahnhof: 60500470 / at:44:41121

- LinzAG Linz/Donau Hillerstraße: 60500450 / at:44:41171

- NWL Essen Hbf: 20009289 / de:05113:9289

- NWL Münster Hbf: 24041000 / de:05515:41000

- NWL Osnabrück Hbf: 28218059 / de:03404:71612

- VRR Essen Hbf: 20009289 / de:05113:9289

- VRR Nettetal Kaldenkirchen Bf: 20023754 / de:05166:23754

- VRR Venlo Bf: 21009676 / NL:S:vl

Conclusion

Looks like numeric stop IDs are sufficient.

They're missing from the COORD_REQUEST endpoint, but that's not a dealbreaker -- all database-related operations work with DM_REQUEST and STOPSEQCOORD_REQUEST data.

Also, there seems to be no universal mapping between the two types of stop IDs.

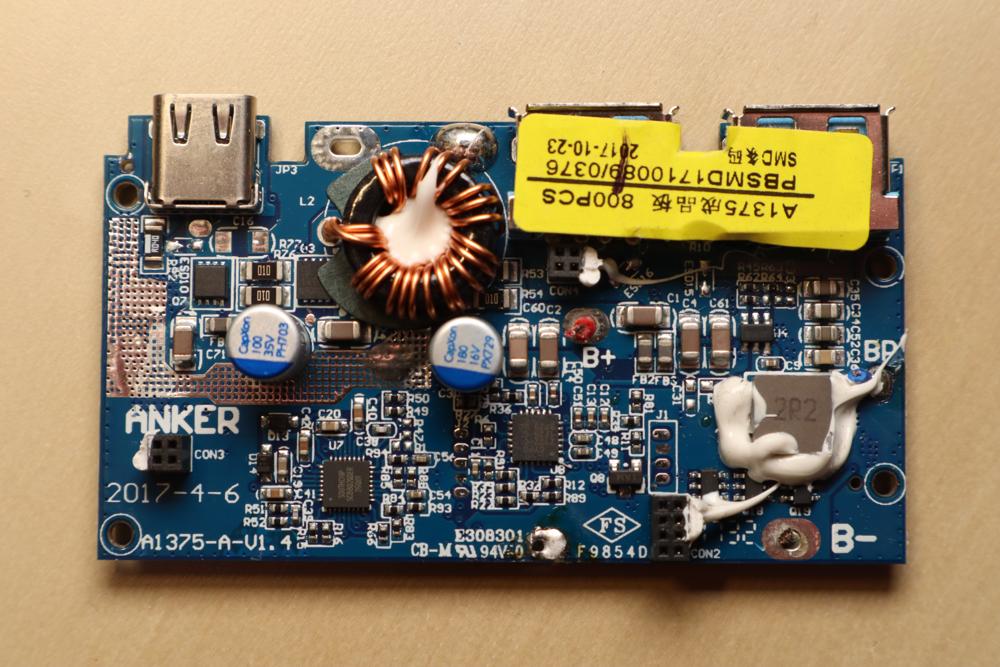

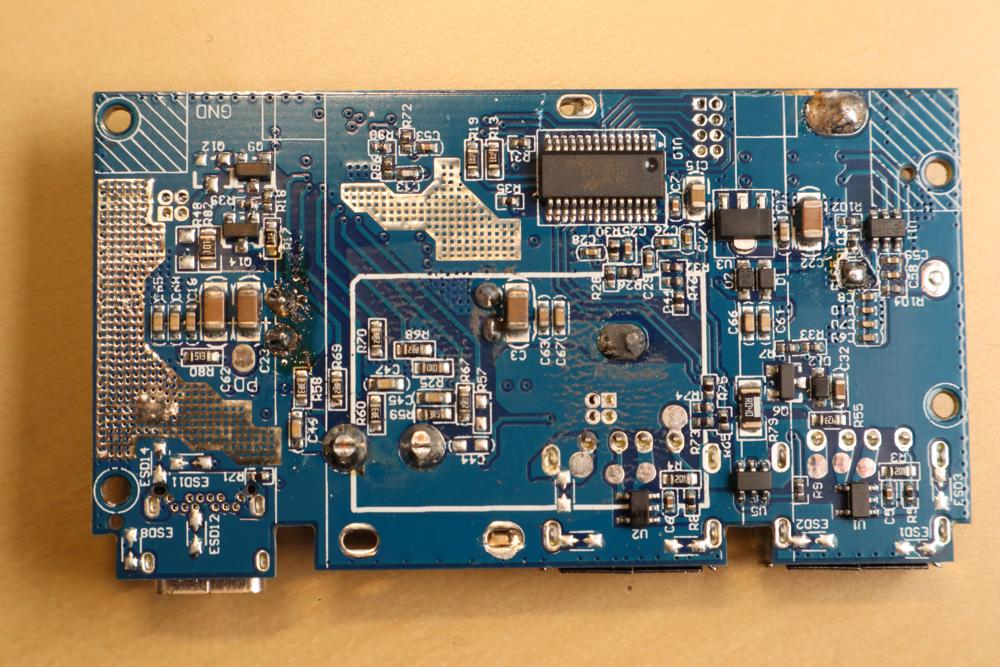

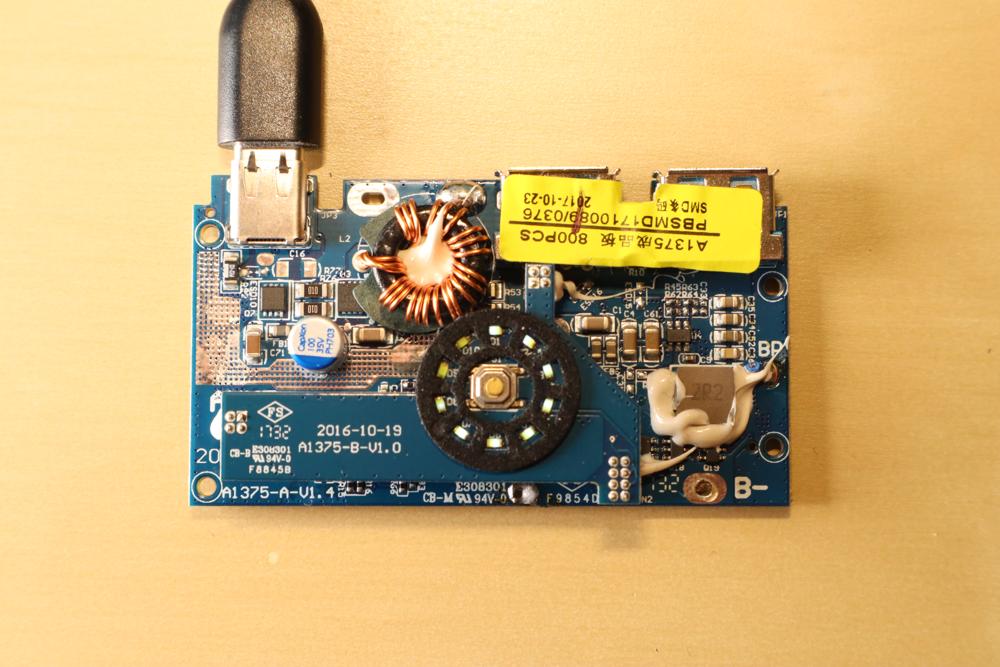

PowerCore 5000 Teardown and Repair

I usually carry a PowerCore 5000 powerbank with an attached USB-A to USB-C cable in my purse. In hindsight, always having the cable attached to it (rather than just when using it) is not the best idea, as it exerts considerable leverage on the USB port. So, to little surprise, at some point the output port stopped working unless the cable connected to it was pressed in the right direction.

Luckily, the powerbank is quite easy to open up, repair, and re-assemble.

Caution: This powerbank contains an 18.5 Wh LiIon cell. In normal operation, the (dis)charge PCB is in charge of battery management tasks like short circuit protection. Disassembly exposes the raw 26650 LiIon cell, which likely does not contain a built-in protection circuit. Puncturing, shorting, heating, or otherwise mishandling it can lead to fire and/or explosion. Don't disassemble a powerbank unless you know what you are doing. Don't work with soldering irons close to LiIon cells unless you really know what you are doing.

Teardown

The connector side of the power bank features a glued-on plastic cover on top of a screwed-on plastic cover.

The glued-on cover can be pried open with moderate effort by placing a suitable object between the two plastic covers, revealing the screwed-on second plastic cover.

With the powerbank placed on a table (not held in your hands), you can now take a PH00 screwdriver to release the three screws that hold it in place. Once that is done, simply lift the case up, revealing the actual circuitry (LiIon cell and PCB). If you were holding the powerbank in your hands while removing the screws, the circuitry likely fell out instead.

The innards are quite simple: A single 26650 LiIon cell; a single PCB; and a two-part plastic assembly featuring a button and LED diffusors.

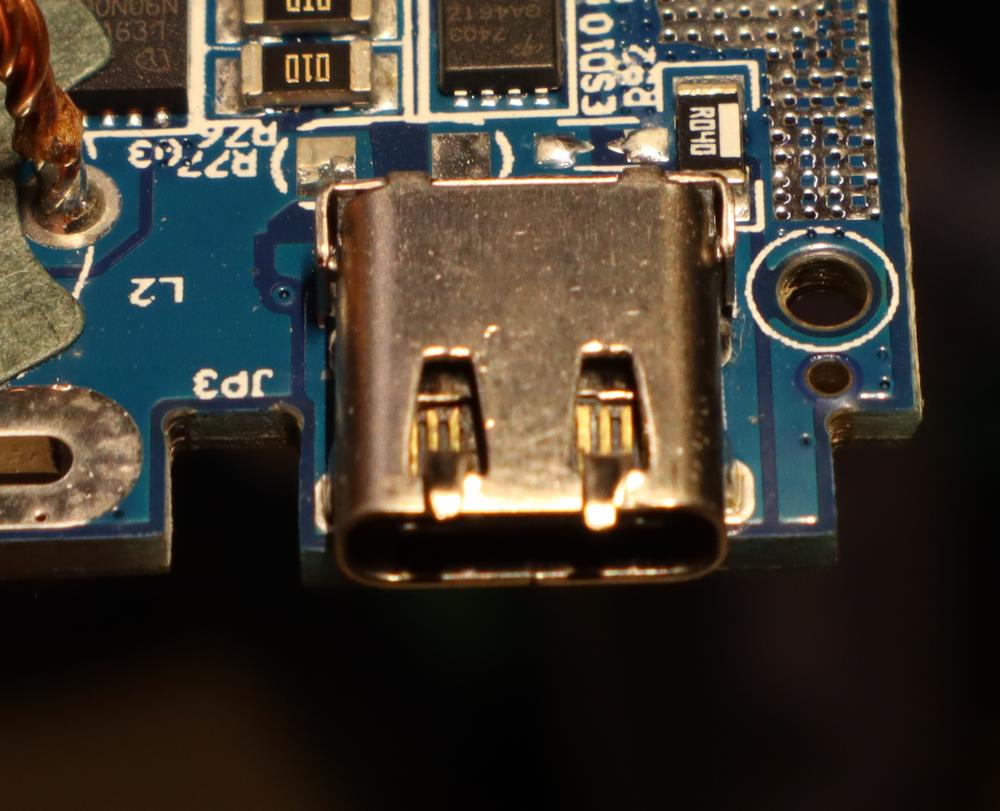

Repair

In my case, the culprit was a loose solder connection between the USB-A output port and the PCB. Nudging the capacitor out of the way and carefully applying some additional solder to the port's GND and VCC pins solved the issue. Just be wary of the fact that you're operating a soldering iron next to a LiIon cell that does not like triple-digit temperatures…

Re-Assembly

- Slide the button assembly and diffusor back into the case until it locks into place.

- Slide the LiIon cell and PCB assembly back into the case.

- Place the powerbank on its bottom (i.e., so that the USB ports face up).

- Place the plastic cover on top of the PCB assembly and tighten its three screws.

- Put the glued-on top cover back on. It comes with alignment pins; in my case I did not have to apply new glue.

Caffeinated Chocolate

For the past ten years, I have been making caffeinated chocolate in order to always have a source of caffeine with me that I can consume in a pinch.

The recipe is relatively simple, but I never got around to writing it down outside of ephemeral microblog posts. So, here it is: Koffeinschoki.

Benchmarking an AliExpress MT3608 Boost Converter

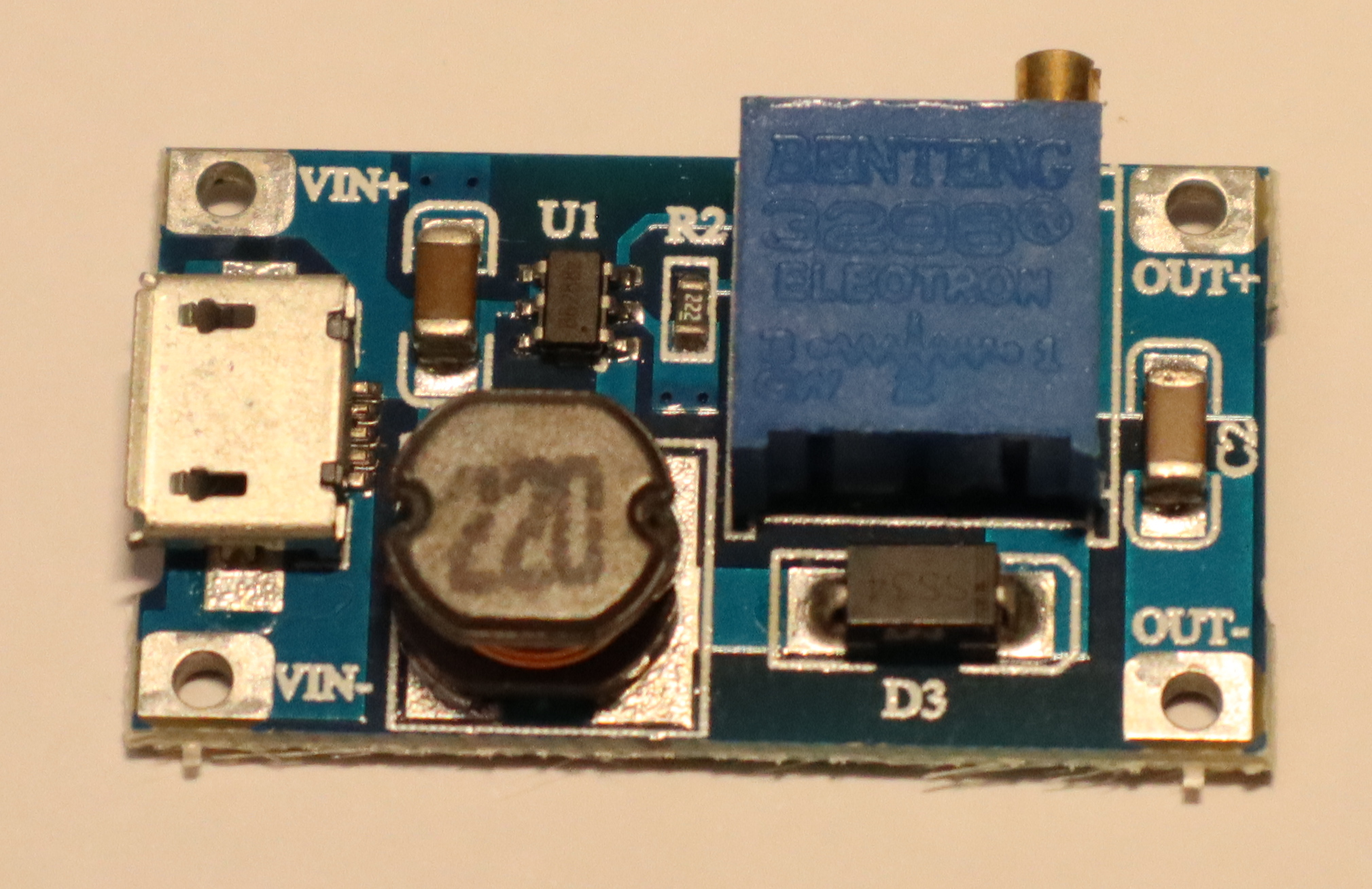

Over the past years, I have obtained a variety of buck and boost converters, mostly from AliExpress and ebay. Now that I finally have a way of characterizing them, I am curious about their performance in practice.

Today's specimen is an MT3608-based step up / boost converter from AliExpress. It's sold with a microUSB input, so boosting 5V from USB to 9V or 12V is a likely application. However, given its low quiescent current, it also seems like a good candidate for boosting ~3.7V from a LiIon battery to 5V for USB.

Specs

Advertised ratings vary. The following conservative estimates might be close to reality.

- Input range: 3V .. 24 V

- Output range: 5V .. 28V

- Maximum input current: 0.8 A (1 A?) continuous, 2 A burst.

- Quiescent current: ~100µA

- Maybe: thermal overload protection

- Maybe: internal 4 A over-current limit

The boost modules I have use a multi-turn potentiometer to configure output voltage.

Application

In this setup, I focused on boosting LiIon voltage to 5V for USB output. LiIon voltage typically ranges from 3.0 to 4.2 V -- I went up to 4.5 V just to gather some more data.

Caution

I took reasonable care to calibrate my readings, but will not give any guarantees. The following results might not be close to reality, and might be affected by knock-off chips and sub-par circuit design.

Output Voltage Stability

Both input voltage and output power of a boost converter can vary over time, especially when powered via a LiIon battery. Its output voltage should remain constant in all cases, or only sag a little under load. Most importantly, it should never exceed its idle output voltage – otherwise, connected devices may break.

Up to about 400 mA output current, the converter is well-behaved. Beyond that (i.e., once its input current exceeds 800mA), its output voltage is all over the place – both below and above the set point. With an observed range of 4.5 to 5.7 V, it is also way outside the USB specification, which states that devices must accept 4.5 to 5.2 V.

So, I'd strongly advise against using this chip to power USB devices that may draw more than a few hundred mA.

At 9V and 12V output, I did not notice issues at up to 400 mA, but did not measure anything beyond that. Also, the measurement setup for these two benchmarks was a bit less accurate.

Efficiency

In low-power operation (no more than a few hundred mA), the converter is quite efficient. Beyond that (i.e., in the unstable output voltage area) its efficiency varies as well.

Further Observations

Once input current exceeds 800mA, the devices I have here emit a relatively loud, high-frequency noise.

TL;DR

Exercise caution to avoid frying USB devices.

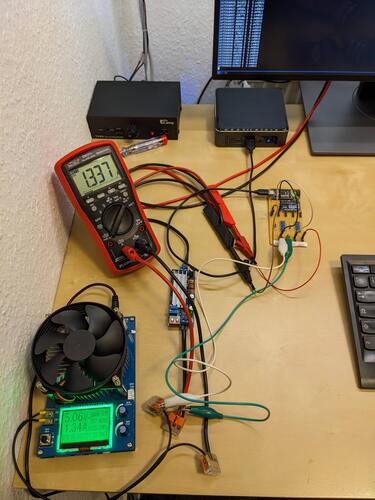

Building a test setup for benchmarking buck/boost converters

I have a growing collection of mostly cheap buck/boost converters and am kinda curious about their efficiency and output voltage stability.

Measuring that typically entails varying input voltage and output current while logging input voltage (V_i), input current (I_i), output voltage (V_o), and output current (I_o).

For each reading, efficiency is then defined as (V_o · I_o) / (V_i · I_i) · 100%.

+-------------+
|             |
|    Input    |
|             |
+---+-----+---+
    |     |
    |    +-+
    |    I_i
    |    +-+
    +-V_i-+
    |     |
+---+-----+---+
|             |
|  Converter  |
|    under    |
|    Test     |
|             |
+---+-----+---+
    |     |
    +-V_o-+
    |    +-+
    |    I_o
    |    +-+
    |     |
+---+-----+---+
|             |
|   Output    |
|             |
+-------------+
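As a quick illustration of the efficiency formula, here is a minimal Python sketch. The function name and the sample reading are made up for this example and are not taken from an actual measurement.

```python
def efficiency_percent(v_in, i_in, v_out, i_out):
    """Return converter efficiency in percent: (V_o * I_o) / (V_i * I_i) * 100."""
    p_in = v_in * i_in
    if p_in <= 0:
        raise ValueError("input power must be positive")
    return (v_out * i_out) / p_in * 100


# Hypothetical reading: 3.7 V / 0.30 A at the input, 5.0 V / 0.20 A at the output.
reading = {"v_in": 3.7, "i_in": 0.30, "v_out": 5.0, "i_out": 0.20}
print(f"{efficiency_percent(**reading):.1f} %")
```

With one such reading per (V_i, I_o) combination, plotting efficiency over input voltage is a matter of applying this function to each log entry.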

The professional method of obtaining these values would probably involve a Source/Measure Unit (SMU) with 4-wire sensing and remote control to automatically vary input voltage / output current while logging voltage and current readings to a database. I do not have such a device here -- first, they tend to cost €€€€ or even €€€€€, and second, many of those are more at home in the single-digit Watt range. I do, however, have a lab PSU with remote control and access to output voltage and current readings, a cheap electronic load (without remote control), an ADS1115 16-Bit ADC for voltage measurements, and an ATMega328 for data logging. This allows me to manually set a constant output current I_o and then automatically vary the input voltage while logging V_i, I_i, and V_o.

There is just one catch: The ADS1115 cannot measure voltages that exceed its input voltage (VCC, in this case 5V provided via USB). A voltage divider solves this, at the cost of causing a small current to flow through the divider rather than the buck/boost converter under test. In my case, I only had 10kΩ 1% resistors at hand, and used them to build an 8:1 divider for both differential ADS1115 input channels. This way, I can measure up to 40V, with up to 500µA flowing through the voltage divider. Compared to the 100 mA to several Amperes I intend to use this setup with, that is negligible.

V_i + ----+                   VCC GND               VCC GND
         70k                   |   |                 |   |
          +--+ +------------+  |   |  +-----------+  |   |
         10k +-+A0          +--+   |  |           +--+   |
V_i - ----+-+  |            |      |  |           |      |
            +--+A1          +------+  |           +------+
V_o + ----+    |  ADS1115   |         | ATMega328 |
         70k+--+A2       SCL+---------+SCL      TX+--------to USB-Serial converter
          +-+  |            |         |           |
         10k +-+A3       SDA+---------+SDA        |
V_o - ----+--+ +------------+         +-----------+
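The divider arithmetic can be double-checked with a few lines of Python. This is only a sketch: the constant and function names are invented here, and building the 70 kΩ leg from seven 10 kΩ resistors in series is an assumption based on the parts mentioned above.

```python
R_TOP = 70_000     # assumed: seven 10 kOhm resistors in series
R_BOTTOM = 10_000  # single 10 kOhm resistor across the ADC input
ADC_MAX_V = 5.0    # ADC inputs must not exceed VCC (5 V via USB)


def divider_ratio(r_top=R_TOP, r_bottom=R_BOTTOM):
    """Factor by which the divider scales the measured voltage down."""
    return (r_top + r_bottom) / r_bottom


def max_measurable_voltage(adc_max=ADC_MAX_V):
    """Highest voltage that still stays within the ADC input range."""
    return adc_max * divider_ratio()


def divider_current(voltage, r_top=R_TOP, r_bottom=R_BOTTOM):
    """Current (in A) that bypasses the converter through the divider."""
    return voltage / (r_top + r_bottom)


def actual_voltage(adc_voltage):
    """Scale an ADC reading back up to the voltage at the divider input."""
    return adc_voltage * divider_ratio()


print(divider_ratio(), max_measurable_voltage(), divider_current(40.0))
```

At 40 V, the divider draws 0.5 mA, which is where the 500µA figure comes from.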

Of course, this whole contraption is far from certifiably accurate or ppm-safe, and even less so when looking at the real-world setup on my desk.

I did however find it to be accurate within ±10mV after some calibration, so it is sufficient to determine whether a converter is in the 80% or 90% efficiency neighbourhood and whether it actually outputs the configured 5.2V or decides to go up to 5.5V under certain load conditions. Luckily, that is all I need.

The bottom line here is: If you have sufficiently simple / low-accuracy requirements, cheap components that may already be lying around in some forgotten project drawer can be quite useful. Also, I really like how easy working with the ADS1115 chip is :)

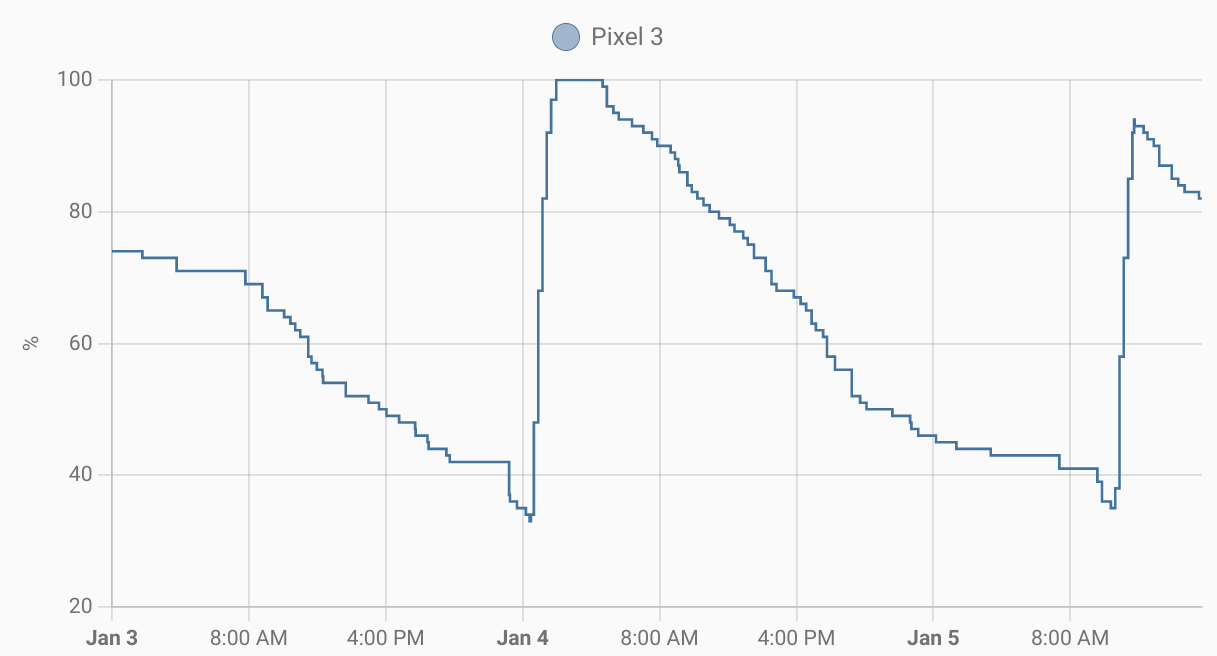

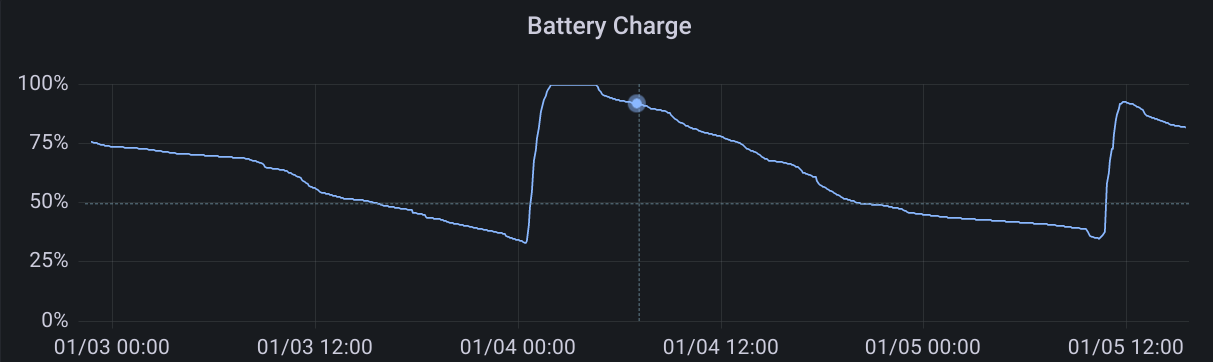

Logging HomeAssistant data to InfluxDB

I'm running a Home Assistant instance at home to have a nice graphical sensor overview and home control interface for the various more or less DIY-ish devices I use. Since I like to monitor the hell out of everything, I also operate an InfluxDB for longer-term storage and fancy plots of sensor readings.

Most of my ESP8266 and Raspberry Pi-based DIY sensors report both to MQTT (→ Home Assistant) and InfluxDB. For Zigbee devices I'm using a small script that parses MQTT messages (intended for Zigbee2MQTT ↔ Home Assistant integration) and passes them on to InfluxDB. However, there are also devices that are neither DIY nor using Zigbee, such as the storage and battery readings logged by the Home Assistant app on my smartphone.

Luckily, Home Assistant has a REST API that can be used to query device states (including sensor readings) with token-based authentication. So, all a Home Assistant to InfluxDB gateway needs to do is query the REST API periodically and write the state of all relevant sensors to InfluxDB. For binary sensors (e.g. switch states), this is really all there is to it.

For numeric sensors (e.g. battery charge), especially with an irregular update schedule, the script should take the time of the sensor's last update into account. This way, InfluxDB can properly interpolate between data points, producing (IMHO) much prettier graphs than Home Assistant does. If you also want to extend your Home Assistant setup with InfluxDB, hass to influxdb may be a helpful starting point.
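To make the idea more concrete, here is a minimal sketch using only the Python standard library. It is not the script linked above. It assumes Home Assistant's `/api/states` endpoint with a long-lived access token, state objects carrying `entity_id`, `state`, and `last_updated` fields, and an InfluxDB 1.x `/write` endpoint; the measurement name `hass`, the tag layout, and the example entity are invented for illustration.

```python
import json
import urllib.request
from datetime import datetime


def fetch_states(base_url, token):
    """Fetch all entity states via GET /api/states (token-based authentication)."""
    request = urllib.request.Request(
        f"{base_url}/api/states",
        headers={"Authorization": f"Bearer {token}"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def state_to_line(state, measurement="hass"):
    """Turn one state object into an InfluxDB line, or None if it is not numeric."""
    try:
        value = float(state["state"])
    except (KeyError, TypeError, ValueError):
        return None  # e.g. "unavailable" or "on" / "off"
    # Use the sensor's own update time rather than the polling time, so that
    # irregularly updated sensors end up with correctly placed data points.
    updated = datetime.fromisoformat(state["last_updated"])
    return f"{measurement},entity_id={state['entity_id']} value={value} {int(updated.timestamp())}"


def write_lines(influx_url, lines):
    """POST line protocol data; timestamps are given in seconds (precision=s)."""
    request = urllib.request.Request(
        f"{influx_url}&precision=s", data="\n".join(lines).encode(), method="POST"
    )
    urllib.request.urlopen(request).close()


example = {
    "entity_id": "sensor.phone_battery_level",  # hypothetical entity
    "state": "81",
    "last_updated": "2021-09-12T11:00:41+00:00",
}
print(state_to_line(example))
```

A periodic job would call `fetch_states`, keep the non-`None` results of `state_to_line`, and hand them to `write_lines` with a URL such as `http://influxdb:8086/write?db=hosts`.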

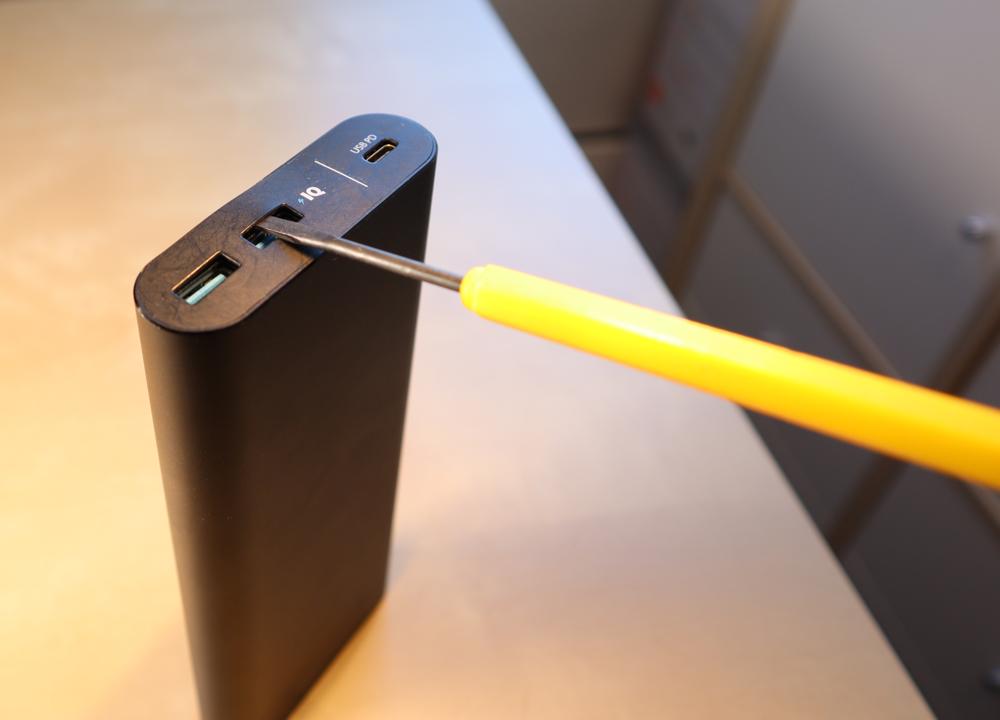

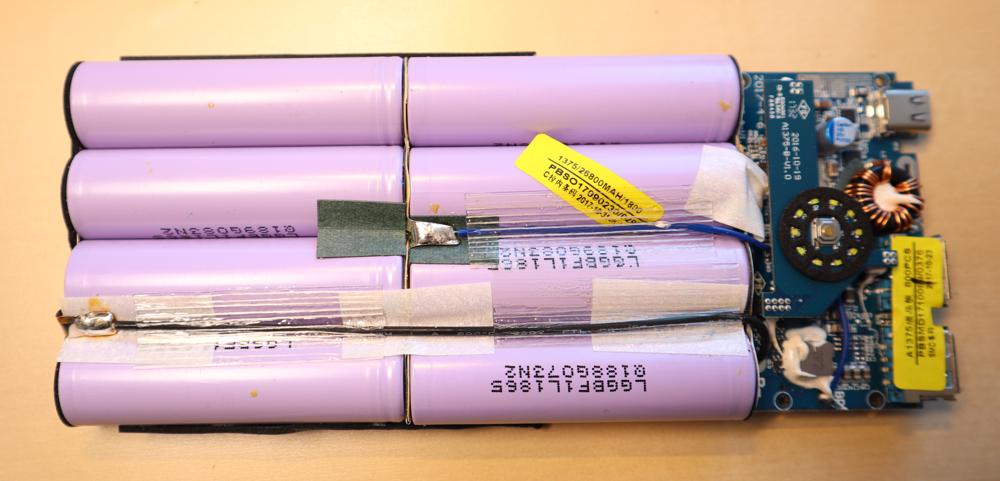

PowerCore+ 26800 Teardown

After nearly five years of service, my PowerCore+ 26800 power bank broke down recently. After a few weeks with an only intermittently working USB-C output, it stopped providing power altogether – and also stopped accepting power to recharge the battery pack, providing a distinct smell of magic smoke and an internal short circuit instead.

As the power bank is out of warranty anyways, this is a good opportunity for a happy little autopsy.

Caution: This powerbank contains nearly 100 Wh worth of LiIon cells. In normal operation, the (dis)charge PCB is in charge of battery management tasks like short circuit prevention. Disassembling the device exposes raw LiIon cells, which typically do not contain separate protection circuitry. Puncturing, shorting, or otherwise mishandling one of those can lead to fire. Don't disassemble a powerbank unless you know what you are doing.

Case Teardown

The top and bottom plastic covers are glued on and can be pried open with moderate effort, revealing four screws each.

After removing the screws and a second (also glued-on) top cover, you can push onto the connector board (top) to coax the cell and PCB assembly out of the case. A good spot for application of force is the plastic surface next to the USB-C port. It's a tight fit, so the assembly won't slide out by itself. Pushing from the bottom won't work.

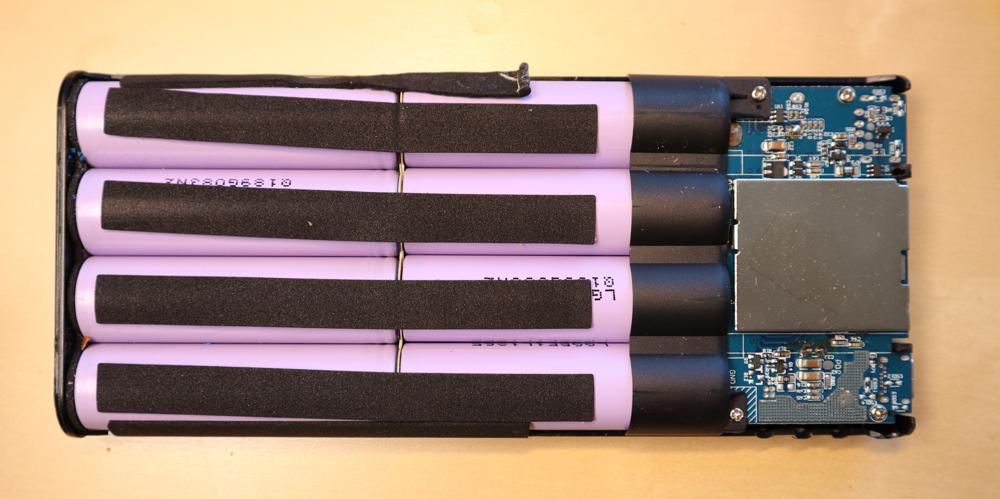

The LiIon cell layout is 2S4P with balancing. The cells are labeled “LGGBF1L1865”, which appears to correspond to LG's INR18650F1L model.

Each cell is rated as follows:

- Nominal capacity: 3.3 Ah at 3.63 V (12 Wh)

- Charge current: 0.3C (975 mA) nominal, 0.5C (1625 mA) maximum, 4.2 V / 50 mA cut-off

- Discharge current: 0.2C (650 mA) nominal, up to 1.5C (4875 mA) maximum, 2.5V cut-off

For the 2S4P pack, this gives:

- Nominal capacity: 13.2 Ah at 7.26 V (96 Wh)

- Charge current: 0.3C (3.9 A) nominal, 0.5C (6.5 A) maximum, 200mA cut-off

- Discharge current: 0.2C (2.6 A) nominal, up to 1.5C (19.5 A) maximum, 5.0V cut-off
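The pack figures follow directly from the cell figures: cells in series add up voltages, cells in parallel add up capacity and current. A small Python sketch of that arithmetic, with class and field names chosen just for this example:

```python
from dataclasses import dataclass


@dataclass
class Rating:
    capacity_ah: float
    nominal_v: float
    max_charge_a: float
    max_discharge_a: float
    cutoff_v: float

    @property
    def energy_wh(self):
        return self.capacity_ah * self.nominal_v


def pack_rating(cell, series, parallel):
    """Scale a single-cell rating to a pack with the given S/P layout."""
    return Rating(
        capacity_ah=cell.capacity_ah * parallel,          # parallel: capacity adds up
        nominal_v=cell.nominal_v * series,                # series: voltage adds up
        max_charge_a=cell.max_charge_a * parallel,        # parallel: current adds up
        max_discharge_a=cell.max_discharge_a * parallel,
        cutoff_v=cell.cutoff_v * series,
    )


# Per-cell values as listed above
cell = Rating(capacity_ah=3.3, nominal_v=3.63, max_charge_a=1.625,
              max_discharge_a=4.875, cutoff_v=2.5)
pack = pack_rating(cell, series=2, parallel=4)
print(pack, round(pack.energy_wh))
```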

For comparison, the powerbank's product specifications state:

- Capacity: 26.8 Ah at 3.6V (96 Wh)

- Input: Up to 27 W (9 V, 3 A) → Charging probably uses less than 0.3C

- Output: Up to 25 W (20 V, 1.25 A) via USB-C + about 20 W (5 V, 2 A) via USB-A → Discharge current is up to 0.5C (6.6 A)

My mostly discharged cells read 3.18 and 3.20V, respectively, so the cell management chip seems to be operating in a rather conservative voltage range. This should be good for longevity.

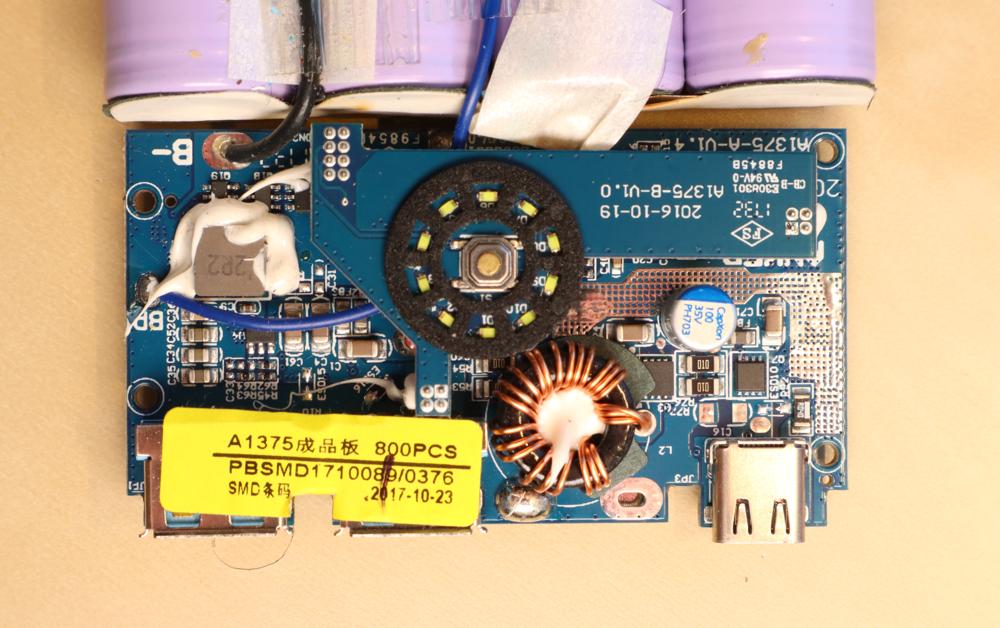

PCB Teardown

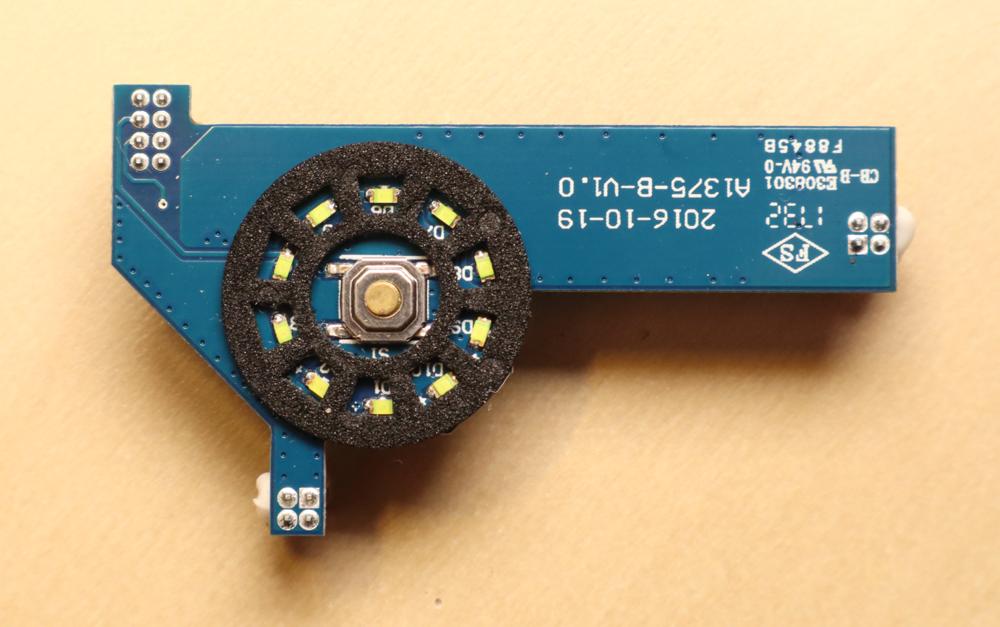

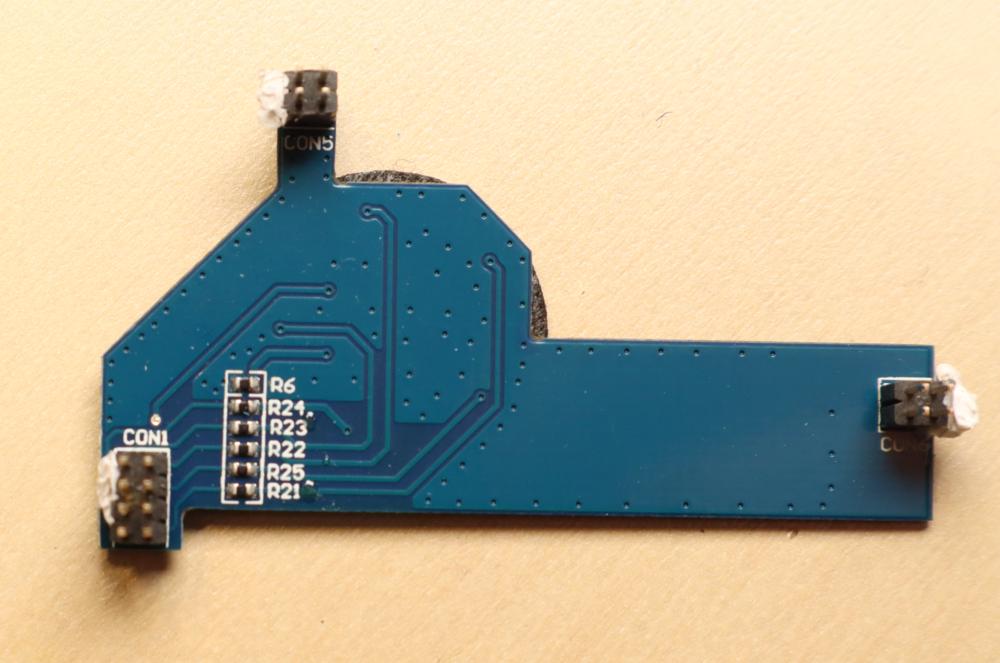

The top PCB is only responsible for LED output and button input.

The bottom PCB includes an SC8802 synchronous, bi-directional, 4-switch buck-boost charger controller and a HT66F319 microcontroller.

The Culprit

Even with disconnected batteries, there's a two ohm short circuit between USB-C VCC and USB-C GND. The culprit turned out to be the USB-C plug itself.

USB-C plugs contain a tiny PCB with contacts on both sides that the cable slides onto. In this case, the lower (recessed, non-contact) part of the PCB is embedded into a metal piece for stability. Over time, the metal piece had moved towards the contacts, eventually causing an electrical connection and thus a short circuit. After moving it back, the power bank is working again. I don't trust the USB-C port anymore, though.

Thoughts on embedded environmental sensors

Over the past few years, I've been frequently working with I²C environmental sensors for measuring temperature, humidity and so on. Here are some thoughts on and observations of the sensors and breakout boards I used along the way. Note that this is by no means a proper professional review, so you should take everything posted here with a grain of salt.

Minimal drivers for all sensors listed here can be found in the multipass project.

Sensors and Datasheet specs

| Sensor | Property | Resolution | Accuracy | Range |
|---|---|---|---|---|
| AM2320 | Temperature [°C] | 0.1 | ±0.5 | -40 .. 80 |
| AM2320 | Humidity [%] | 0.1 | ±3 | 0 .. 99.9 |
| BME280 | Temperature [°C] | 0.01 | ±1 @ 0 .. 65 | -40 .. 85 |
| BME280 | Humidity [%] | 0.008 | ±3 @ 20 .. 80 | 0 .. 100 |
| BME280 | Pressure [hPa] | 0.18 Pa | ±1 | 300 .. 1100 |
| BME680 | Temperature [°C] | 0.01 | ±1 @ 0 .. 65 | -40 .. 85 |
| BME680 | Humidity [%] | 0.008 | ±3 @ 20 .. 80 | 0 .. 100 |
| BME680 | Pressure [hPa] | 0.18 Pa | ±0.6 | 300 .. 1100 |
| BME680 | VOC [IAQ] | 1 | ±15% ±15 | 0 .. ? |
| CCS811 | TVOC [ppb] | 1 | ? | 0 .. 1187 |
| HDC1080 | Temperature [°C] | 0.1 | ±0.2 @ 5 .. 60 | -40 .. 125 |
| HDC1080 | Humidity [%] | 0.1 | ±2 | 0 .. 100 |
| LM75B | Temperature [°C] | 0.125 | ±2 / ±3 | -55 .. +125 |

Notes

AM2320

- I²C readout is a multi-step process with special timing requirements

- Reported humidity appears to be far too low on some devices

BME280

- max 3.6V; some breakout boards provide LDO and level shifters for 5V operation

- Supports both I²C and SPI; operating mode selected by CSB value

- The breakout boards I am aware of connect VCC to both VDD and VDDIO, making power sequencing with respect to CSB a tad difficult. On some of them, I had to power CSB before providing power to VCC to ensure that the chip starts up in I²C mode.

BME680

- IAQ calculation is only possible with a closed-source BLOB provided by Bosch Sensortec. Setting that up on a Raspberry Pi is quite easy, though.

CCS811

- In addition to Total Volatile Organic Compound (TVOC), the sensor reports “equivalent CO₂” (eCO₂) data calculated from TVOC. I found these to be unreliable.

LM75B

- readout is trivial

- SMBus compatible: using it on a Raspberry Pi is as simple as

i2cget -y 1 0x48 0x00 w
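The word printed by i2cget still needs to be converted to a temperature. The following Python sketch assumes the usual LM75B register layout (11-bit two's complement value, left-aligned, most significant byte transmitted first) and the little-endian byte order of SMBus word reads; the function name and the sample values are made up for illustration.

```python
def lm75b_word_to_celsius(word):
    """Convert the 16-bit word reported by `i2cget ... w` to degrees Celsius."""
    # SMBus word reads are little-endian, whereas the sensor sends its most
    # significant byte first, so the two bytes need to be swapped.
    raw = ((word & 0xFF) << 8) | (word >> 8)
    # Interpret the result as a signed 16-bit value.
    if raw & 0x8000:
        raw -= 0x10000
    # The upper 11 bits hold the temperature in steps of 0.125 °C.
    return (raw >> 5) * 0.125


print(lm75b_word_to_celsius(0x2019))  # hypothetical reading
```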

HDC1080

- reported humidity appears to be a tad too low

Handling USB-serial connection issues on some ESP8266 dev boards

Depending on the configuration of a few GPIO pins during reset, ESP8266 chips can boot into a variety of modes. The most common ones are flash startup (GPIO0 high → execute the program code on a flash chip connected to the ESP8266) and UART download (GPIO0 low → transfer program code from UART to the flash chip).

Most development boards use the serial DTR and RTS lines of their usb-serial converter to allow reset (and boot mode selection) of the ESP8266 by (de)assertion of the DTR/RTS signals. esptool also uses this method when uploading new firmware to the flash.

Usually, things just work™ and an ESP8266 can be used with esptool, nodemcu-uploader, miniterm/screen, and other software. If esptool/nodemcu-uploader work, but miniterm/screen do not (and show repeating gibberish or nothing at all instead), the reason may be unusual DTR/RTS behaviour. I found manual control of DTR/RTS to help in this case:

- Connect to the serial device

- de-assert DTR

- de-assert RTS

- receive a working UART connection

For example, in pyserial-miniterm these signals can be set on startup:

pyserial-miniterm --dtr 0 --rts 0 /dev/ttyUSB0 115200

They can also be toggled at runtime via Ctrl+T Ctrl+D (DTR) and Ctrl+T Ctrl+R (RTS).

$ pyserial-miniterm /dev/ttyUSB0 115200

--- Miniterm on /dev/ttyUSB0 115200,8,N,1 ---

--- Quit: Ctrl+] | Menu: Ctrl+T | Help: Ctrl+T followed by Ctrl+H ---

--- DTR inactive ---

--- RTS inactive ---

Hello, World!

Vindriktning is a cheap USB-C powered particle sensor that uses three colored LEDs to indicate the amount of PM2.5 (i.e., particulate matter with a diameter of less than 2.5µm) as a proxy for indoor air quality. By default, it simply measures PM2.5 and indicates whether air quality is good, not so good, or poor – there is no digital read-out of PM2.5 values.

Luckily, adding an ESP8266 to integrate it with MQTT, HomeAssistant, InfluxDB, or other software is quite easy. However, while most examples use Arduino's C++ dialect for programming, I personally prefer to stick to the NodeMCU Lua firmware on ESP8266 boards. Here is my basic readout code for reference.

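-- Parse one 20-byte Vindriktning frame and print the PM2.5 reading.
function uart_callback(data)
    -- Each frame starts with the fixed header 0x16 0x11 0x0b
    if string.byte(data, 1) ~= 0x16 or string.byte(data, 2) ~= 0x11 or string.byte(data, 3) ~= 0x0b then
        print("invalid header")
        return
    end
    -- All 20 bytes (including the checksum byte) must add up to 0 modulo 256
    local checksum = 0
    for i = 1, 20 do
        checksum = (checksum + string.byte(data, i)) % 256
    end
    if checksum ~= 0 then
        print("invalid checksum")
        return
    end
    -- Bytes 6 and 7 hold the PM2.5 value, high byte first
    pm25 = string.byte(data, 6) * 256 + string.byte(data, 7)
    print("pm25 = " .. pm25)
end

function setup_uart()
    -- 9600 baud, no TX pin, RX on pin 2 (GPIO4)
    port = softuart.setup(9600, nil, 2)
    -- Invoke uart_callback whenever 20 bytes have been received
    port:on("data", 20, uart_callback)
end

setup_uart()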

This code assumes that the Vindriktning's TX pin is connected to ESP8266 GPIO4 (labeled "D2" on most ESP8266 dev boards). As the ESP8266 only has a single RX channel, which we reserve for programming and debugging, I'm using a Software UART implementation. At 9600 baud, that's not an issue.
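For testing the frame handling without hardware, the same checks can be written in Python. This is a sketch that mirrors the Lua code above (header, checksum over 20 bytes, PM2.5 in bytes 6 and 7); `make_frame` is a hypothetical helper that builds synthetic frames and does not reproduce the other fields of real sensor output.

```python
HEADER = bytes([0x16, 0x11, 0x0B])
FRAME_LENGTH = 20


def parse_frame(frame):
    """Return the PM2.5 value of a 20-byte frame or raise ValueError."""
    if len(frame) != FRAME_LENGTH or frame[:3] != HEADER:
        raise ValueError("invalid header")
    # All bytes, including the trailing checksum byte, must sum to 0 mod 256
    if sum(frame) % 256 != 0:
        raise ValueError("invalid checksum")
    # Lua's 1-based bytes 6 and 7 are indexes 5 and 6 here
    return frame[5] * 256 + frame[6]


def make_frame(pm25):
    """Build a synthetic frame for testing (hypothetical helper)."""
    body = bytearray(FRAME_LENGTH - 1)
    body[0:3] = HEADER
    body[5] = pm25 >> 8
    body[6] = pm25 & 0xFF
    checksum = (-sum(body)) % 256
    return bytes(body) + bytes([checksum])


print(parse_frame(make_frame(42)))
```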

If you're running MediaWiki 1.35 with PluggableAuth and LDAPAuthentication2, there are two ways of supporting login for both LDAP accounts and local accounts.

In LocalSettings.php, set either

$wgPluggableAuth_EnableLocalLogin = true;

or

$LDAPAuthentication2AllowLocalLogin = true;

They have slightly different UI, but work pretty much the same from a login perspective. However, the LDAPAuthentication2 variant does not support local account creation.

So, if you're getting an error message along the lines of "The supplied

credentials could not be used for account creation" when trying to register

a local account on your MediaWiki instance, you may need to set

$wgPluggableAuth_EnableLocalLogin = true; in your LocalSettings.php.

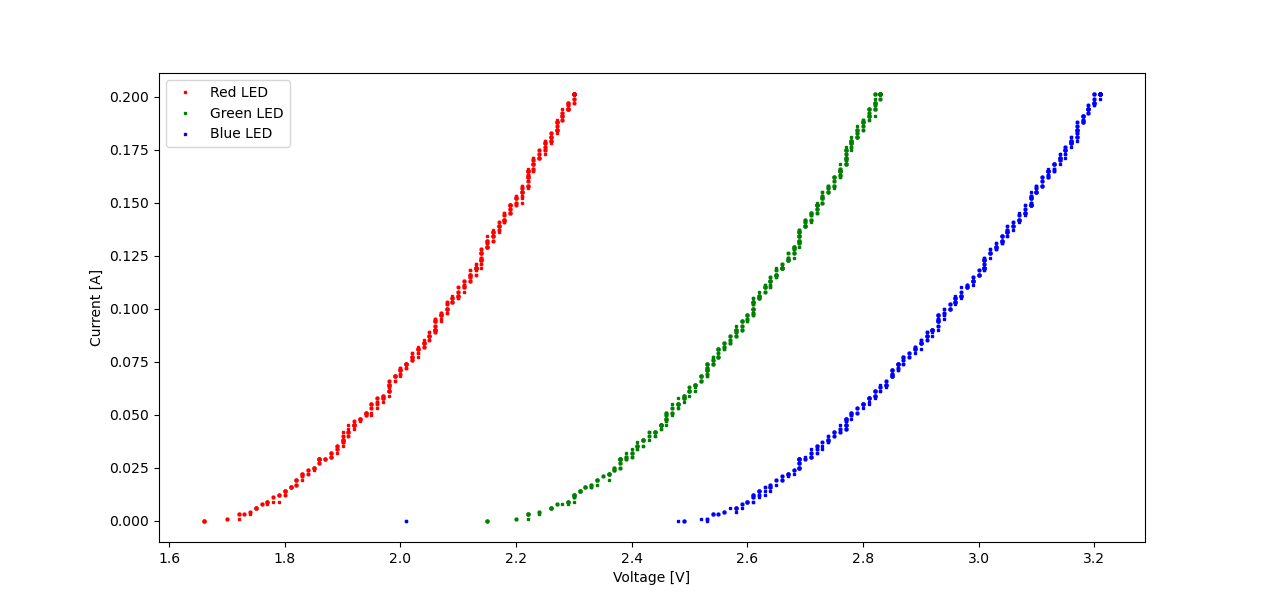

Measurement Automation with Korad Bench Supplies

A few years back, I bought an RND Lab RND 320-KA3005P bench power supply both for its capability of delivering up to 30V @ 5A, and for its USB serial control channel. The latter can be used to both read out voltage/current data and change all settings which are accessible from the front panel, including voltage and current limits.

This weekend, I finally got around to writing a proper Python tool for controlling and automating it: korad-logger works with most KAxxxxP power supplies, which are sold under brand names such as Korad or RND Lab.

Now, basic characteristics such as I-V curves are trivial to generate. For instance, here's the I-V curve for an unknown RGB power LED.

It's based on three calls of the following command.

bin/korad-logger --voltage-limit 5 --current-range '0 0.2 0.001' --save led-$color.log 210

At a sample rate of about 10 Hz and 1 mA / 10 mV resolution, the bench supply won't perform miracles. Nevertheless, it is quite handy. If you measure only current (e.g. in CV mode), or only voltage (CC mode), you can even get near 20 Hz.

Avoiding accidental bricks of MSP430FR launchpads

The MSP430FR launchpad series is a pretty nifty tool both for research and teaching. You get an ultra-low-power 16-bit microcontroller, persistent FRAM, and energy measurement capabilities, all for under $20.

Unfortunately, especially when it comes to teaching, there's one major drawback: Out-of-bounds memory accesses that are off by several thousand bytes can permanently brick the CPU. This typically happens either due to a buffer overflow in FRAM or a stack pointer underflow (i.e., stack overflow) in SRAM.

This issue recently bit one of my students and it turns out that it could have been avoided. So I'll give a quick overview of symptoms, cause, and protection against it, both as a reference for myself and for others.

Symptoms

A bricked MSP430FR launchpad is no longer flashable or erasable via JTAG or BSL. Attempts to control it via MSP Flasher fail with error 16: "The Debug Interface to the device has been secured".

* -----/|-------------------------------------------------------------------- *

* / |__ *

* /_ / MSP Flasher v1.3.20 *

* | / *

* -----|/-------------------------------------------------------------------- *

*

* Evaluating triggers...

* Invalid argument for -i trigger. Default used (USB).

* Checking for available FET debuggers:

* Found USB FET @ ttyACM0 <- Selected

* Initializing interface @ ttyACM0...done

* Checking firmware compatibility:

* FET firmware is up to date.

* Reading FW version...done

* Setting VCC to 3000 mV...done

* Accessing device...

# Exit: 16

# ERROR: The Debug Interface to the device has been secured

* Starting target code execution...done

* Disconnecting from device...done

*

* ----------------------------------------------------------------------------

* Driver : closed (Internal error)

* ----------------------------------------------------------------------------

*/

Unless you know the exact memory pattern written by the buffer overflow (and it specifies a reasonable password length), there is no remedy I'm aware of. The CPU is permanently bricked.

Cause

MSP430FR CPUs use a unified memory architecture: Registers, volatile SRAM, and persistent FRAM are all part of the same address space. This includes fuses (“JTAG signatures”) used to secure the device by either disabling JTAG access altogether or protecting it with a user-defined password.

While write access to several CPU registers requires specific passwords and timing sequences to be observed, this is not the case for the JTAG signatures. Change them, reset the CPU, and it's game over.

The JTAG signatures reside next to the reset vector and interrupt vector at the

16-bit address boundary, within the address range from 0xff80 to 0xffff. On

MSP430FR5994 CPUs, the (writable!) text segment ends at 0xff7f and SRAM is

located in 0x1c00 to 0x3bff. So, a small buffer overflow in a persistent

variable (located in FRAM) or a significant stack pointer underflow (starting in

SRAM, growing down, and wrapping from 0x0000 to 0xffff) may overwrite the

JTAG signatures with arbitrary data.

Protection

MSP430FR CPUs contain a bare-bones Memory Protection Unit. It can partition the

address space into up to three distinct regions with 1kB granularity and

enforce RWX settings for each region. So, if we disallow writes to the 1kB

region from 0xfc00 to 0xffff, we no longer have to worry about accidentally

overwriting the JTAG signatures. To do so, place the following lines in your

startup code:

MPUCTL0 = MPUPW;

MPUSEGB2 = 0x1000; // memory address 0x10000

MPUSEGB1 = 0x0fc0; // memory address 0x0fc00

MPUSAM &= ~MPUSEG2WE; // disallow writes

MPUSAM |= MPUSEG2VS; // reset CPU on violation

MPUCTL0 = MPUPW | MPUENA;

MPUCTL0_H = 0;

Note that this disallows writes not just to the JTAG signatures, but also to

part of the text segment as well as the interrupt vector table. If an

application dynamically alters interrupt vector table entries or uses

persistent FRAM variables at addresses beyond 0xfbff, this method will break

the application. Most practical use cases shouldn't run into this issue.
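The register values in the snippet above are segment border addresses shifted right by four bits (0x10000 → 0x1000, 0x0fc00 → 0x0fc0). The following Python sketch reproduces that calculation and a simplified model of which segment a main memory address falls into; the function names are invented here, and the model ignores everything outside main memory.

```python
def mpu_border_register(address):
    """Return the MPUSEGBx value for a segment border at the given address."""
    if address % 0x400 != 0:
        raise ValueError("segment borders must be 1 kB aligned")
    return address >> 4


def segment_of(address, border1, border2):
    """Return the MPU segment (1, 2, or 3) that contains a main memory address."""
    if address < border1:
        return 1
    if address < border2:
        return 2
    return 3


print(hex(mpu_border_register(0xFC00)), hex(mpu_border_register(0x10000)))
# The JTAG signatures (0xff80 .. 0xffff) end up in the write-protected segment 2
print(segment_of(0xFF80, 0xFC00, 0x10000))
```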

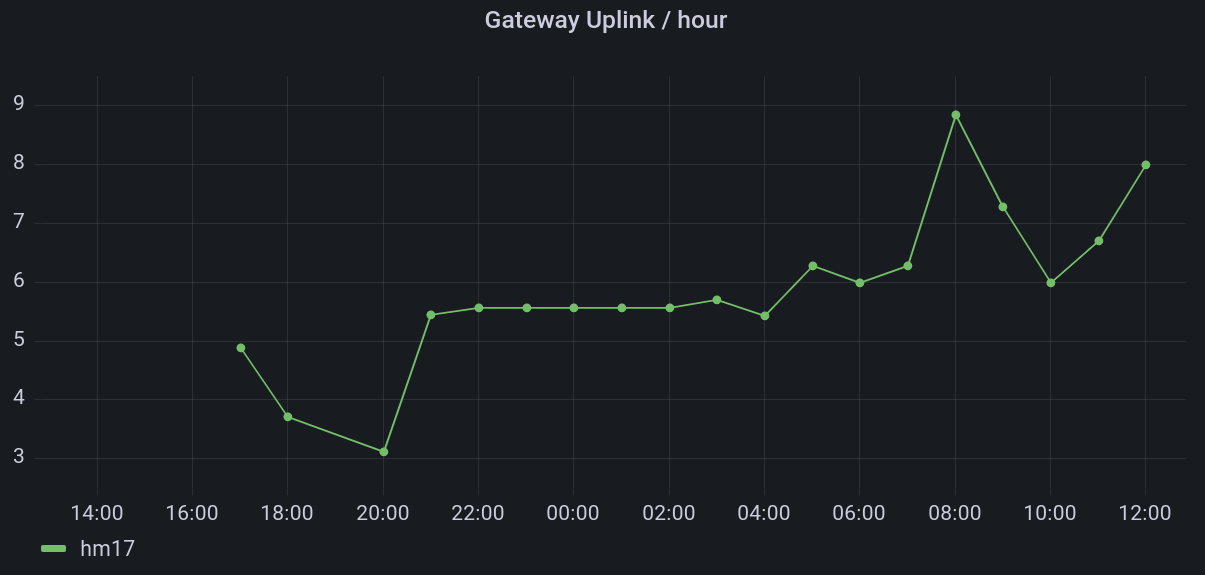

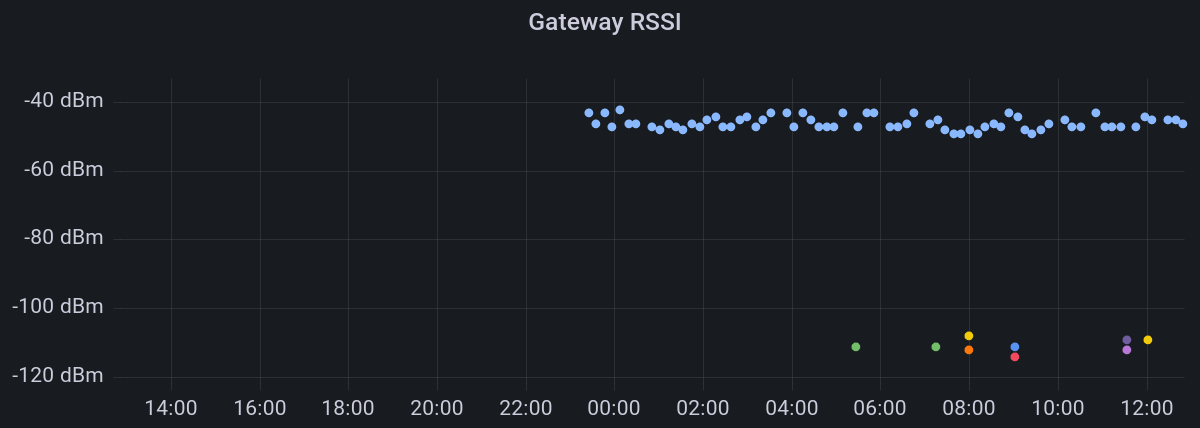

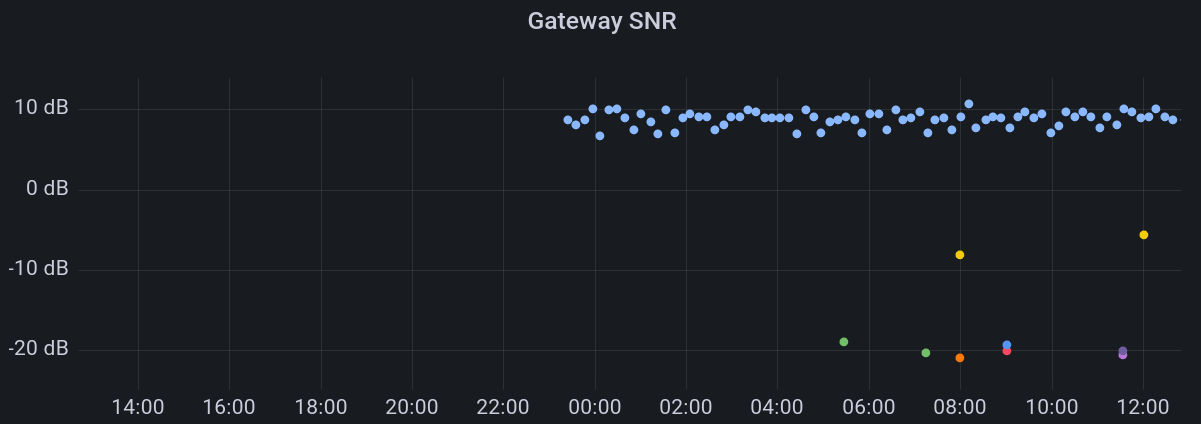

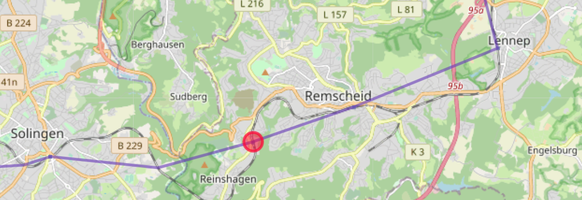

Monitoring The Things Indoor Gateway (TTIG) via InfluxDB

The Things Indoor Gateway (TTIG) is an affordable LoRaWAN gateway, ideal for getting started with The Things Network or other setups. Here are two ways of monitoring its radio performance and feeding data into e.g. InfluxDB, so you can display the results in a small Grafana dashboard.

TTN Gateway Server API

The Things Stack's Gateway Server API allows requesting uplink and downlink stats of a gateway if you have an appropriate API key.

First, you need to navigate to the gateway page in your TTN console and create a new API key with “View gateway status” rights. Using this key and your gateway ID, you can request connection statistics:

> curl -H "Authorization: Bearer GATEWAY_KEY" \

https://eu1.cloud.thethings.network/api/v3/gs/gateways/GATEWAY_ID/connection/stats | jq

{

"last_uplink_received_at": "2021-09-12T11:00:41.490891018Z",

"uplink_count": "115",

"last_downlink_received_at": "2021-09-12T00:05:45.008438327Z",

"downlink_count": "2",

}

With a cronjob running every few minutes, you can pass the data to InfluxDB. I'm using the following Python script for this:

#!/usr/bin/env python3
# vim:tabstop=4 softtabstop=4 shiftwidth=4 textwidth=160 smarttab expandtab colorcolumn=160

import requests


def main(auth_token, gateway_id):
    # Query the Gateway Server for connection statistics
    response = requests.get(
        f"https://eu1.cloud.thethings.network/api/v3/gs/gateways/{gateway_id}/connection/stats",
        headers={"Authorization": f"Bearer {auth_token}"},
    )
    data = response.json()

    # Default to 0 if a counter is missing from the response
    uplink_count = data.get("uplink_count", 0)
    downlink_count = data.get("downlink_count", 0)

    # Write both counters to InfluxDB as a single line protocol entry
    requests.post(
        "http://influxdb:8086/write?db=hosts",
        data=f"ttn_gateway,name={gateway_id} uplink_count={uplink_count},downlink_count={downlink_count}",
    )


if __name__ == "__main__":
    main("GATEWAY_KEY", "GATEWAY_ID")

It's also possible to assign “Read gateway traffic” rights to an API key. I didn't play around with that yet.

USB-UART Logs

By soldering a 1kΩ resistor onto R86 on the TTIG PCB, you can enable its built-in CP2102N USB-UART converter. This allows you to use the USB port not just for power, but also for observing its debug output. See Xose Pérez' Hacking the TTI Indoor Gateway blog post for details.

With this hack, connecting the TTIG to a Linux computer capable of sourcing up

to 900mA via USB will cause a /dev/ttyUSB serial interface to appear. You can

use tools such as screen or picocom with a baud rate of 115200 to observe

the output. Apart from memory usage and time synchronization logs, it includes

a line similar to the following one for each received LoRa transmission:

RX 868.3MHz DR5 SF7/BW125 snr=9.0 rssi=-46 xtime=0x43000FB11517C3 - updf mhdr=40 DevAddr=01234567 FCtrl=00 FCnt=502 FOpts=[] 0151B4 mic=-1842874694 (15 bytes)

So you can log statistics about Received Signal Strength, Signal-to-Noise Ratio, Spreading Factor and similar.
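As a quick illustration of what can be extracted, here is a minimal Python sketch that applies a regular expression to the sample line above. The dictionary keys and the function name are chosen for this example only.

```python
import re

RX_PATTERN = re.compile(
    r"RX ([0-9.]+)MHz DR([0-9]+) SF([0-9]+)/BW([0-9]+) "
    r"snr=([0-9.-]+) rssi=([0-9-]+) .* DevAddr=([^ ]*)"
)


def parse_rx_line(line):
    """Return the radio parameters of an RX log line, or None if it does not match."""
    match = RX_PATTERN.search(line)
    if not match:
        return None
    freq, dr, sf, bw, snr, rssi, devaddr = match.groups()
    return {
        "freq_mhz": float(freq),
        "dr": int(dr),
        "sf": int(sf),
        "bw_khz": int(bw),
        "snr": float(snr),
        "rssi": int(rssi),
        "devaddr": devaddr,
    }


SAMPLE = (
    "RX 868.3MHz DR5 SF7/BW125 snr=9.0 rssi=-46 xtime=0x43000FB11517C3 - updf "
    "mhdr=40 DevAddr=01234567 FCtrl=00 FCnt=502 FOpts=[] 0151B4 mic=-1842874694 (15 bytes)"
)
print(parse_rx_line(SAMPLE))
```

Lines that do not describe a received transmission (memory usage, time synchronization) simply yield `None` and can be skipped.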

The Python script I'm using for this is somewhat more involved:

#!/usr/bin/env python3
# vim:tabstop=4 softtabstop=4 shiftwidth=4 textwidth=160 smarttab expandtab colorcolumn=160

import re
import requests
import serial
import serial.threaded
import sys
import time


class SerialReader(serial.threaded.Protocol):
    """Collect UART data and pass each complete line to the callback."""

    def __init__(self, callback):
        self.callback = callback
        self.recv_buf = ""

    def __call__(self):
        # ReaderThread expects a protocol factory; hand out this instance.
        return self

    def data_received(self, data):
        try:
            str_data = data.decode("UTF-8")
            self.recv_buf += str_data

            lines = self.recv_buf.split("\n")
            if len(lines) > 1:
                # The last element is an incomplete line; keep it for later.
                self.recv_buf = lines[-1]
                for line in lines[:-1]:
                    self.callback(line.strip())

        except UnicodeDecodeError:
            pass
            # sys.stderr.write('UART output contains garbage: {data}\n'.format(data = data))


class SerialMonitor:
    def __init__(self, port: str, baud: int, callback):
        self.ser = serial.serial_for_url(port, do_not_open=True)
        self.ser.baudrate = baud
        self.ser.parity = "N"
        self.ser.rtscts = False
        self.ser.xonxoff = False

        try:
            self.ser.open()
        except serial.SerialException as e:
            sys.stderr.write(
                "Could not open serial port {}: {}\n".format(self.ser.name, e)
            )
            sys.exit(1)

        self.reader = SerialReader(callback=callback)
        self.worker = serial.threaded.ReaderThread(self.ser, self.reader)
        self.worker.start()

    def close(self):
        self.worker.stop()
        self.ser.close()


if __name__ == "__main__":

    def parse_line(line):
        # Extract frequency, data rate, SF, bandwidth, SNR, RSSI and DevAddr.
        match = re.search(
            "RX ([0-9.]+)MHz DR([0-9]+) SF([0-9]+)/BW([0-9]+) snr=([0-9.-]+) rssi=([0-9-]+) .* DevAddr=([^ ]*)",
            line,
        )
        if match:
            requests.post(
                "http://influxdb:8086/write?db=hosts",
                data=f"ttn_rx,gateway=GATEWAY_ID,devaddr={match.group(7)} dr={match.group(2)},sf={match.group(3)},bw={match.group(4)},snr={match.group(5)},rssi={match.group(6)}",
            )

    monitor = SerialMonitor(
        "/dev/ttyUSB0",
        115200,
        parse_line,
    )

    try:
        # The reader thread does all the work; just keep the process alive.
        while True:
            time.sleep(60)
    except KeyboardInterrupt:
        monitor.close()
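To check the regular expression without a gateway attached, it can be applied to the sample log line shown earlier. The following is a minimal sketch: the helper name parse_rx_line and the dictionary keys are made up for illustration, and only the pattern itself is taken from the script.

```python
import re

# Same pattern as in parse_line() above.
RX_PATTERN = re.compile(
    r"RX ([0-9.]+)MHz DR([0-9]+) SF([0-9]+)/BW([0-9]+) "
    r"snr=([0-9.-]+) rssi=([0-9-]+) .* DevAddr=([^ ]*)"
)


def parse_rx_line(line):
    """Return the fields of an RX log line as a dict, or None if it does not match."""
    match = RX_PATTERN.search(line)
    if match is None:
        return None
    return {
        "freq_mhz": float(match.group(1)),
        "dr": int(match.group(2)),
        "sf": int(match.group(3)),
        "bw_khz": int(match.group(4)),
        "snr": float(match.group(5)),
        "rssi": int(match.group(6)),
        "devaddr": match.group(7),
    }


sample = (
    "RX 868.3MHz DR5 SF7/BW125 snr=9.0 rssi=-46 xtime=0x43000FB11517C3 - updf "
    "mhdr=40 DevAddr=01234567 FCtrl=00 FCnt=502 FOpts=[] 0151B4 mic=-1842874694 (15 bytes)"
)
fields = parse_rx_line(sample)
print(fields)
```

Lines that are not RX reports (memory usage, time synchronization) simply yield None and can be skipped.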

Using EFA APIs with JSON

Most German timetable information services use either EFA ("Elektronische FahrplanAuskunft") or HAFAS ("HAcon Fahrplan-Auskunfts-System"). Most EFA instances, in turn, now come with native JSON support, so they are easy to use from scripts. JSON APIs such as the one provided by https://vrrf.finalrewind.org are thus largely obsolete.

Here is a Python example for https://efa.vrr.de:

#!/usr/bin/env python3

import aiohttp
import asyncio
from datetime import datetime
import json


class EFA:
    def __init__(self, url, proximity_search=False):
        # Strip a trailing slash so that the request URL does not contain "//".
        self.dm_url = url.rstrip("/") + "/XML_DM_REQUEST"
        self.dm_post_data = {
            "language": "de",
            "mode": "direct",
            "outputFormat": "JSON",
            "type_dm": "stop",
            "useProxFootSearch": "0",
            "useRealtime": "1",
        }

        if proximity_search:
            self.dm_post_data["useProxFootSearch"] = "1"

    async def get_departures(self, place, name, ts):
        self.dm_post_data.update(
            {
                "itdDateDay": ts.day,
                "itdDateMonth": ts.month,
                "itdDateYear": ts.year,
                "itdTimeHour": ts.hour,
                "itdTimeMinute": ts.minute,
                "name_dm": name,
            }
        )
        if place is None:
            self.dm_post_data.pop("place_dm", None)
        else:
            self.dm_post_data.update({"place_dm": place})

        departures = list()
        async with aiohttp.ClientSession() as session:
            async with session.post(self.dm_url, data=self.dm_post_data) as response:
                # EFA may return JSON with a text/html Content-Type, which response.json() does not like.
                departures = json.loads(await response.text())
        return departures


async def main():
    now = datetime.now()
    departures = await EFA("https://efa.vrr.de/standard/").get_departures(
        "Essen", "Hbf", now
    )
    print(json.dumps(departures))


if __name__ == "__main__":
    asyncio.run(main())
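The script above prints the raw response. As a rough sketch of how the result might be processed further, the following helper turns it into a short list of departures. The departureList key is the one mentioned for DM_REQUEST in the stop ID notes; the dateTime and servingLine fields are assumptions about the response layout that should be checked against the actual output of your EFA instance, and the sample data is hand-written for illustration.

```python
def summarize_departures(response):
    """Return (time, line, direction) tuples from a parsed DM_REQUEST response."""
    summary = []
    # departureList may be missing or null if there are no departures.
    for entry in response.get("departureList") or []:
        date_time = entry.get("dateTime", {})  # assumed field name
        line = entry.get("servingLine", {})  # assumed field name
        hhmm = "{:02d}:{:02d}".format(
            int(date_time.get("hour", 0)), int(date_time.get("minute", 0))
        )
        summary.append((hhmm, line.get("number"), line.get("direction")))
    return summary


# Hand-written sample data, not an actual EFA response.
sample_response = {
    "departureList": [
        {
            "stopID": "20009289",
            "dateTime": {"hour": "9", "minute": "5"},
            "servingLine": {"number": "42", "direction": "Example Terminus"},
        }
    ]
}

summary = summarize_departures(sample_response)
print(summary)
```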

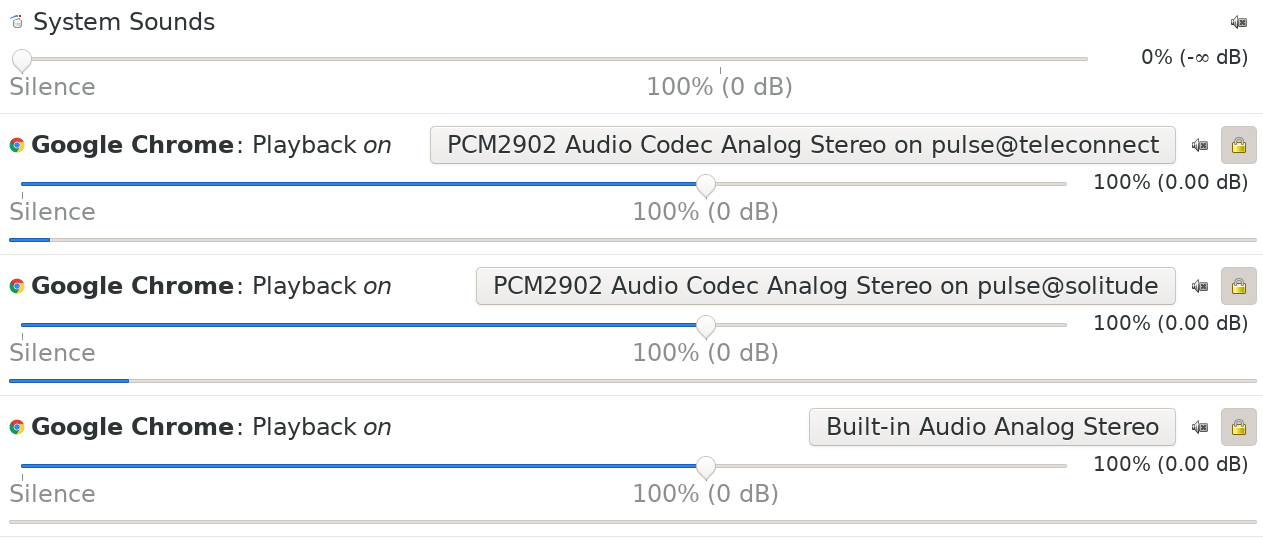

On-demand playback on a remote pulseaudio sink

Setting PULSE_SERVER forwards the entire system audio to a remote (TCP) network sink. A more fine-grained solution (with control at stream level instead of system level) is almost as easy, thanks to module-tunnel-sink:

pacmd load-module module-tunnel-sink server=192.168.0.195

Now you can select the remote sink for individual streams (or turn it into the default / fallback one) and, for instance, have two different videos play back on two different remote sinks while your messenger's notification sounds remain local.

Locating Moving Trains via GPS

As of today, anyone who opens DBF from a moving train can obtain information about that train using nothing but their GPS position, at least in most cases and with a few limitations. Here, I would like to explain the concept behind it.

Since GTFS currently only provides scheduled data, and the HAFAS train radar (Zugradar) merely searches for arbitrary trips in the vicinity without taking specific railway lines into account, the DBF implementation does not rely on either of them.

Instead, its only API dependency is the arrival/departure board for stations; it computes everything else itself. It will remain functional even if the HAFAS train radar is shut down.

Mapping Positions to Neighbouring Stations

The core of the localization is a database that divides Germany into rectangles of roughly 200m × 300m¹. For each rectangle that contains at least one railway line, it lists all stations that a train passing along this line is scheduled to call at next. For example, a position above the tunnel through the Teutoburg Forest near Lengerich includes, among others,

- Lengerich (Westf) and Natrup-Hagen (RB66),

- Münster (Westf) Hbf and Osnabrück Hbf (IC/ICE lines 30 and 31), and

- Essen Hbf and Hamburg Hbf (IC service between Hamburg and the Ruhr area without intermediate stops).
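A lookup in such a database could look roughly like the following sketch. It assumes that a grid cell is identified by the coordinates rounded to three decimal places, as described in the footnote. The dictionary layout, the function names and the example cell (including its coordinates) are made up for illustration and do not reflect the actual database format.

```python
def grid_key(lat, lon):
    """Map a GPS position to its grid cell, using integers to avoid float keys."""
    return (round(lat * 1000), round(lon * 1000))


# Hypothetical excerpt: cell -> stations that passing trains call at next.
NEXT_STATIONS = {
    (52173, 7905): {
        "Lengerich (Westf)",
        "Natrup-Hagen",
        "Münster (Westf) Hbf",
        "Osnabrück Hbf",
    },
}


def neighbouring_stations(lat, lon):
    """Return the stations stored for the cell containing (lat, lon)."""
    return NEXT_STATIONS.get(grid_key(lat, lon), set())


stations = neighbouring_stations(52.17312, 7.90488)
print(sorted(stations))
```

Cells without a railway line are simply absent, so a position away from any track yields an empty set.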

The database is currently based mostly on the regional rail (SPNV) GTFS line network plan provided by NVBW. Fortunately, it also contains RE and RB lines outside of Baden-Württemberg. It is extended with an (unfortunately non-free and incomplete) set of IC/ICE and S-Bahn connections. I am grateful for pointers to further open data sources with line network information.

Determining Train Candidates

Based on a GPS position, the neighbouring stations are first fetched from the database, and then the arrivals of the next two hours are requested for each station. With a large number of stations, this can take several seconds, as the requests are not issued in parallel. The additional load caused by parallel requests would not even be measurable compared to the remaining (human-caused) HAFAS requests, but too many parallel requests from a single IP are still unlikely to be welcome.

For each train journey, the scheduled and real-time arrival at the requested station as well as the names and scheduled departure times of all previous stations are known. Trains that serve several of the requested stations appear multiple times and are merged into a single journey. The next step is to estimate for each train whether it could currently be at the requested position.

Since the database is fed with pairs of stations, every train that passes only one of the requested stations is discarded first. Such trains very likely do not pass the position in question on their route. Next, for each train, the (known) delay at the requested station is used to estimate the (unknown) delay at the previous stops, and these real-time estimates determine between which two stops the train currently is. Likewise, for each pair of consecutive stops, the distance between the requested position and the straight line connecting the two stops is computed.
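The following sketch illustrates how the current pair of stops could be determined. How the delay at earlier stops is estimated is not specified here; the sketch simply assumes that the delay known for the requested station applies unchanged to all previous stops. Function and variable names are hypothetical.

```python
from datetime import datetime, timedelta


def current_segment(stops, delay, now):
    """
    Estimate between which two stops a train currently is.

    stops: list of (name, scheduled_time) in travel order
    delay: timedelta, assumed to be identical at every stop (simplification)
    Returns (previous_stop, next_stop) or None if the train is not en route.
    """
    for (prev_name, prev_time), (next_name, next_time) in zip(stops, stops[1:]):
        if prev_time + delay <= now < next_time + delay:
            return (prev_name, next_name)
    return None


# Made-up journey for illustration.
stops = [
    ("A", datetime(2020, 1, 1, 10, 0)),
    ("B", datetime(2020, 1, 1, 10, 20)),
    ("C", datetime(2020, 1, 1, 10, 45)),
]
delay = timedelta(minutes=5)

segment = current_segment(stops, delay, datetime(2020, 1, 1, 10, 23))
print(segment)
```

Without the delay, the train would be placed between B and C at 10:23; with the assumed five-minute delay, it is still between A and B.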

Now, all trains whose current estimated position does not lie between the pair of stops closest to the requested position are discarded, as they are very likely not on the right section of track at the moment. Trains that are still at their origin station and will not depart within the next five minutes are dropped as well. An S-Bahn that departs in an hour is hardly travelling along a railway line right now, or even waiting at the platform ready for boarding.
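One way to compute the distance between a position and the straight line connecting two stops is sketched below. It projects all coordinates onto a flat plane (equirectangular approximation), which should be adequate for the short distances involved. This is an assumption about a suitable method, not necessarily what the implementation does, and all names are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0


def to_local_km(lat, lon, ref_lat):
    """Project a position onto a flat plane (equirectangular approximation)."""
    x = math.radians(lon) * math.cos(math.radians(ref_lat)) * EARTH_RADIUS_KM
    y = math.radians(lat) * EARTH_RADIUS_KM
    return x, y


def distance_to_segment_km(pos, stop_a, stop_b):
    """Distance from pos to the line segment stop_a..stop_b; all are (lat, lon)."""
    ref_lat = pos[0]
    px, py = to_local_km(pos[0], pos[1], ref_lat)
    ax, ay = to_local_km(stop_a[0], stop_a[1], ref_lat)
    bx, by = to_local_km(stop_b[0], stop_b[1], ref_lat)
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0:
        t = 0.0  # both stops coincide
    else:
        # Relative position of the closest point, clamped to the segment.
        t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
        t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


on_line = distance_to_segment_km((52.0, 8.0), (52.0, 7.9), (52.0, 8.1))
off_line = distance_to_segment_km((52.1, 8.0), (52.0, 7.9), (52.0, 8.1))
print(on_line, off_line)
```

The pair of stops closest to the requested position is then the one that minimizes this distance, e.g. via min() with distance_to_segment_km as key.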

For the remaining trains, the current position on the straight line between their stops is estimated. I assume constant speed, as I do not know any acceleration profiles or line speeds. The trains are then listed, sorted by their distance to the requested position.
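With constant speed, the position estimate boils down to linear interpolation between the two stops. A minimal sketch, with hypothetical names and made-up coordinates, in which the departure and arrival times are expected to already include the estimated delay:

```python
from datetime import datetime


def interpolate_position(stop_a, stop_b, dep_a, arr_b, now):
    """Estimate (lat, lon) between two stops, assuming constant speed."""
    total = (arr_b - dep_a).total_seconds()
    if total <= 0:
        return stop_a
    # Fraction of the travel time that has elapsed, clamped to [0, 1].
    fraction = (now - dep_a).total_seconds() / total
    fraction = max(0.0, min(1.0, fraction))
    lat = stop_a[0] + fraction * (stop_b[0] - stop_a[0])
    lon = stop_a[1] + fraction * (stop_b[1] - stop_a[1])
    return (lat, lon)


position = interpolate_position(
    (52.0, 7.0),
    (53.0, 8.0),
    datetime(2020, 1, 1, 10, 0),
    datetime(2020, 1, 1, 10, 40),
    datetime(2020, 1, 1, 10, 10),
)
print(position)
```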

More Accurate Position Estimation

Using the actual route geometry of a journey instead of the straight line between stops would allow for a much more accurate position estimate. In particular, it would reveal whether a train's route contains the requested position at all. If not, the train can be discarded right away, even if it is travelling along a different railway line just a few km from the requested position.

This improvement is currently not implemented, as it would further increase the number of required API requests, and I would first like to test whether the results obtained with linear (straight-line) interpolation are already sufficiently useful. Moreover, HAFAS itself regularly misjudges the route and, for example, places an ICE on a non-electrified branch line (instead of the electrified main line that runs a few km away but is longer overall).

In the long run, it would also be interesting to use the time until (or since) reaching the position as a quality measure instead of the distance to it. After all, S-Bahn and ICE trains travel at quite different speeds. This is still on the to-do list.

Source Code

The implementation is still a bit hacky and undocumented, but of course available on GitHub: derf/geolocation-to-train.

Footnotes

¹ For simplicity, GPS coordinates rounded to three decimal places are used. At our latitudes, the resulting grid is not square.

Building Python3 Bindings for libsigrok

The build instructions on the sigrok Wiki only work for Python2, which is past its end-of-life date. To build libsigrok with Python bindings for Python3, you need to set PYTHON=python3 when running configure.

The dependency list is also slightly different:

sudo apt-get install git-core gcc g++ make autoconf autoconf-archive \

automake libtool pkg-config libglib2.0-dev libglibmm-2.4-dev libzip-dev \

libusb-1.0-0-dev libftdi1-dev check doxygen python3-numpy \

python3-dev python-gi-dev python3-setuptools swig default-jdk

"python-gi-dev" is not a typo -- the package covers both Python2 and Python3.

Side note: Installing libserialport-dev instead of building your own version as documented on the Wiki seems to work fine.

Packaging Perl Modules for Debian in Docker

A .deb package is an easy solution for distributing Perl modules to

Debian-based systems. Unlike manual installation using Module::Build, it does

not require re-installation whenever the perl minor version changes. Unlike

project-specific cpanm or carton setups, the module is available

system-wide and can easily be used in random Perl scripts which are not bound

to a project repository.

The Debian package dh-make-perl (also known as cpan2deb) does a good job

here. In many cases, creating a personal package for a Perl module is as easy

as cpan2deb Acme::Octarine. Delegating the build process to Docker may be

useful if you do not have a Debian build host available and would rather avoid

having the build process depend on the (probably not well-defined) state of

your dev machine.

For CPAN modules, all you need is a Debian container with dh-make-perl. Using

this container, run cpan2deb and extract the resulting .deb. You can find a

Dockerfile and some scripts for this task in my

docker-dh-make-perl

repository. The Dockerfile is used to create a dh-make-perl image (so you don't

need to install dh-make-perl in a fresh Debian image whenever you build a

module). scripts/makedeb-docker-helper builds the package inside the

container and copies it to the out/ directory, and scripts/makedeb-docker

orchestrates the process.

Note: A package generated this way is suitable for personal use. It is not fit for inclusion in the Debian package repository. As all Debian packages must have an author, you need to set the DEBEMAIL and DEBFULLNAME environment variables to appropriate values. Feel free to extend the Dockerfile and scripts as you see fit – the repository is meant to provide a starting point only.

For non-CPAN content (e.g. if you are a module author and do not want to wait for your freshly uploaded release to appear on CPAN, or if you need to build a patched version of a CPAN module), the process is slightly more involved. It requires

- additional bind mounts (docker run -v "${PWD}:/orig:ro") to copy the module content into the container,

- a manually provided version (in my case via git describe --dirty), and

- disabling module signing (unless you pass your GPG keyring to the container).

I also manually specify the packages needed for building and testing. I assume

that this is not needed and can be performed automatically by dh-make-perl

--install-deps --install-build-deps.

Module content and versioning depend on your setup, so I will not provide a git repository for this case. Please refer to the makedeb-docker and makedeb-docker-helper scripts in Travel::Routing::DE::VRR, Travel::Status::DE::IRIS and Travel::Status::DE::VRR for examples.