
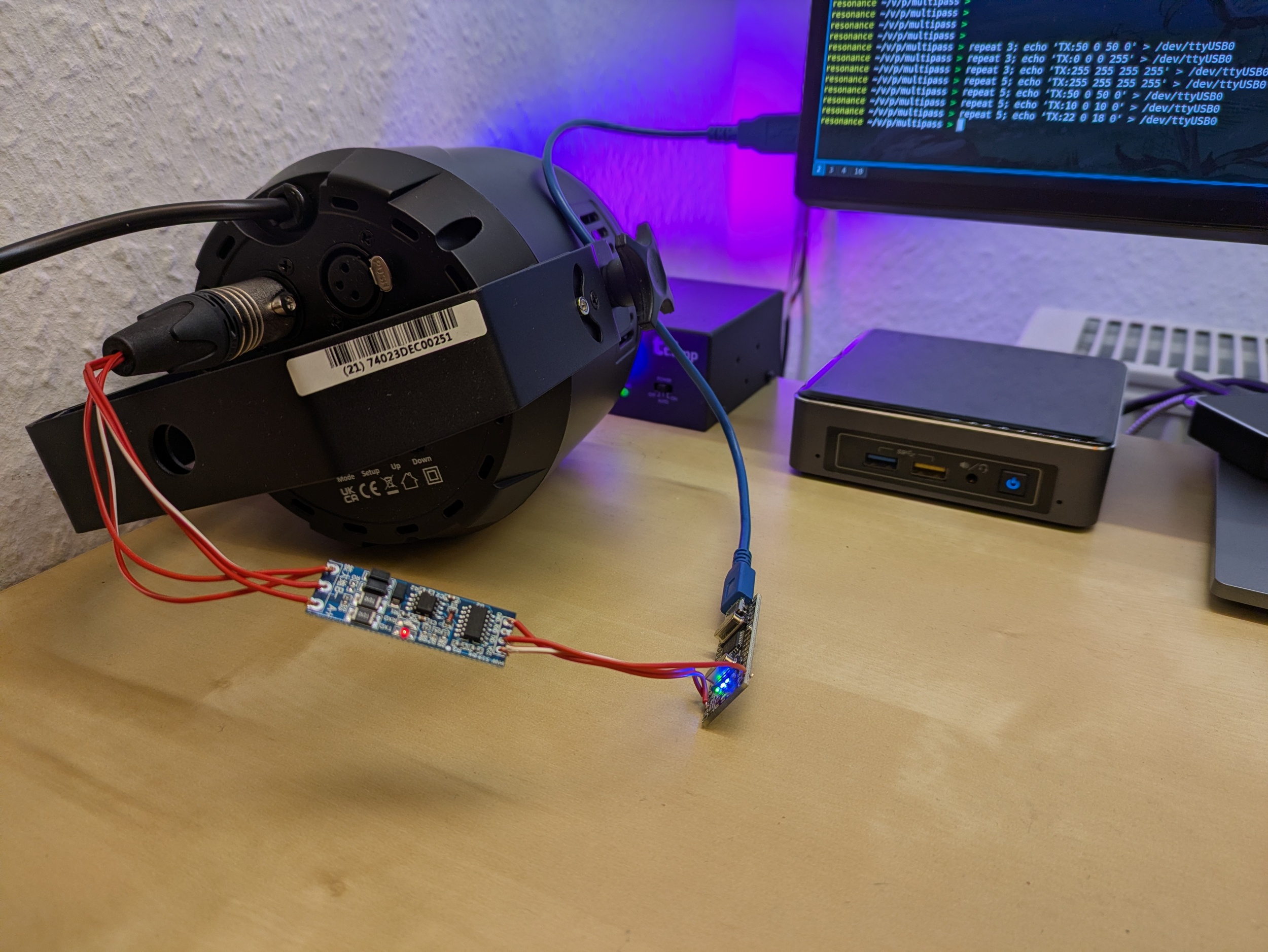

USB → DMX with a single UART (e.g. on an Arduino Nano)

Just in case you're as curious as I was: yes, you can absolutely build a USB to DMX converter that uses a single UART both for receiving ASCII DMX frames via USB (e.g. using an FT232 or CH340G chip) and for sending out DMX frames to a MAX485 or similar. Just not at the same time, but that's what we've got TDMA (time division multiple access) for.

Or, to use a concrete example: yes, an Arduino Nano (ATmega328P with USB-to-serial on-board) can be turned into a USB-to-DMX converter by adding an RS485 adapter and writing a bit of firmware.

The idea is quite simple:

- Every 250 or so milliseconds, disable the UART receive interrupt, configure the UART for DMX output (250 kbaud, 8 data bits, 2 stop bits, no parity), and transmit a DMX frame

- Once done, configure the UART for serial input (e.g., 57600 baud, 8 data bits, 1 stop bit, no parity) and enable the receive interrupt

- Once a complete DMX frame has been received on the USB side, update the DMX output frame accordingly

- Rinse and repeat, making sure not to output any serial data via UART – it's strictly DMX only

The only drawback is that, while DMX output is running, UART input will be lost – there's no receive interrupt, and even if there were, the data would be gibberish due to the incompatible UART configuration. However, 57600 baud is not that fast compared to 250 kbaud, so if the PC just transmits its desired DMX frame a few times in quick succession, one of them is bound to be received.
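To put some rough numbers on this, the following shell sketch estimates how long one DMX frame keeps the UART busy and what share of each output period that is. It assumes a full universe of 512 channels plus the start code and the 250 ms interval from the list above, and it ignores the break and mark-after-break that precede each frame. The function names are made up for this example and are not part of uart-to-dmx-mega328.

```sh
#!/bin/sh
# Back-of-the-envelope timing for the single-UART TDMA scheme.
# Assumptions: N channels plus one start code slot; break and
# mark-after-break are ignored.

# At 250 kbaud, one bit takes 4 µs. Each DMX slot consists of
# 1 start bit + 8 data bits + 2 stop bits = 11 bits = 44 µs.
dmx_frame_us() {
	channels=$1
	echo $(( (channels + 1) * 11 * 4 ))
}

# Share of each output period (in percent, rounded down) during which
# the UART is busy with DMX output, i.e. USB input is lost.
dmx_busy_percent() {
	channels=$1
	period_ms=$2
	echo $(( $(dmx_frame_us "$channels") * 100 / (period_ms * 1000) ))
}

echo "DMX frame: $(dmx_frame_us 512) µs"
echo "UART busy: $(dmx_busy_percent 512 250) % of the time"
```

With these assumptions, a full frame takes about 22.6 ms, so the UART is unavailable for USB input during roughly 9 % of each 250 ms period; fewer channels shrink that window accordingly.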

I implemented this in multipass as uart-to-dmx-mega328 and so far it's working reliably. Of course, it's not suitable for anything where you need sub-second scale timing accuracy. But if all you need are some static or slowly fading background hues, it works just fine. In my case, the Neutrik XLR plug used to transmit the DMX signals to a light fixture is probably by far the most expensive item in the entire setup, save for the light fixture itself.

There are two noteworthy aspects:

Path Adjustments in libvirt / qemu

I'm running Home Assistant OS as a virtual machine on my home server. The HASS OS VM image relies on an OVMF file for booting; this file moved to a different path.

So, in order to make Home Assistant boot again after the upgrade to Debian 13, edit /etc/libvirt/qemu/hassos.xml (or similar) and change /usr/share/OVMF/OVMF_CODE.fd to /usr/share/OVMF/OVMF_CODE_4M.fd.
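Alternatively, the change can go through libvirt itself (virsh edit hassos does the same interactively), which avoids libvirt only picking up hand-edited files under /etc/libvirt/qemu after libvirtd is restarted. A minimal sketch, assuming the domain is named after the XML file (hassos), the old path appears verbatim in its definition, and the script runs as root; fix_ovmf_path is a helper name made up for this example.

```sh
#!/bin/sh
# Sketch: switch a libvirt domain from OVMF_CODE.fd to OVMF_CODE_4M.fd.
# Assumptions: domain name passed as the only argument, run as root.

# Rewrite the old OVMF firmware path in domain XML read from stdin.
fix_ovmf_path() {
	sed 's|/usr/share/OVMF/OVMF_CODE\.fd|/usr/share/OVMF/OVMF_CODE_4M.fd|g'
}

if [ $# -eq 1 ]; then
	xml=$(mktemp)
	# --inactive: edit the persistent definition, not the running config
	virsh dumpxml --inactive "$1" > "$xml.orig" &&
		fix_ovmf_path < "$xml.orig" > "$xml" &&
		virsh define "$xml"
	rm -f "$xml" "$xml.orig"
fi
```

Called with the domain name (e.g. hassos) as its only argument, it redefines the VM with the new firmware path; without an argument it only defines the helper.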

paho.mqtt.client changes

The upgrade from python3-paho-mqtt 1.6.1 to 2.1.0 came with some changes in its API.

In order to make it happy (and not spew any warnings), replace paho.mqtt.client.Client() with paho.mqtt.client.Client(callback_api_version=paho.mqtt.client.CallbackAPIVersion.VERSION2).

Apart from that, everything went smoothly.

Logging Steam Deck Hardware Stats to InfluxDB

I'm a sucker for colourful Grafana graphs (and not-necessarily-useful data logging in general), so naturally, I also had to see if I could get some hardware stats from my Steam Deck in there. Turns out: thanks to the Deck's Linux foundation, this is quite easy. All you need to do is enable SSH access (or work directly on the device) and set up a systemd user timer with whatever kind of data logging you want. In my case, I did the following:

- Switch to desktop mode

- Open konsole (i.e., the terminal app)

- Run passwd to set a password for the deck user

- Run ssh deck@steamdeck from my workstation

- Install a script for InfluxDB logging in ~/bin

- Install a user service and associated timer in ~/.config/systemd/user to run this script every few minutes

- Enable the timer
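The service and timer from the list above could look roughly like the following sketch, which writes both unit files to ~/.config/systemd/user. The unit names influxdb-log.service / influxdb-log.timer, the script path ~/bin/influxdb-log, and the five-minute interval are placeholders picked for this example.

```sh
#!/bin/sh
# Sketch: create a systemd user service + timer that runs a logging script.
# Placeholder names: influxdb-log.{service,timer}, script at ~/bin/influxdb-log.
set -e

unit_dir="${XDG_CONFIG_HOME:-$HOME/.config}/systemd/user"
mkdir -p "$unit_dir"

# Oneshot service: runs the script once per activation (%h = home directory).
cat > "$unit_dir/influxdb-log.service" <<'EOF'
[Unit]
Description=Log hardware stats to InfluxDB

[Service]
Type=oneshot
ExecStart=%h/bin/influxdb-log
EOF

# Timer: activates the service every five minutes.
cat > "$unit_dir/influxdb-log.timer" <<'EOF'
[Unit]
Description=Log hardware stats to InfluxDB every five minutes

[Timer]
OnCalendar=*:0/5
Persistent=true

[Install]
WantedBy=timers.target
EOF
```

Afterwards, systemctl --user daemon-reload and systemctl --user enable --now influxdb-log.timer activate the timer, and systemctl --user list-timers shows when it fires next. User timers only run while the user's systemd instance is up; if that should also be the case without an active login, loginctl enable-linger deck takes care of it.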

My script looks as follows:

```sh
#!/bin/sh

HOST=steamdeck

exec curl -s -XPOST 'https://[…]/influxdb/write?db=hosts' \
	--data "
memory,type=embedded,host=${HOST} $(free -b | awk '($1 == "Mem:") { printf "used_bytes=%d,used_percent=%f", $2 - $7, ($2 - $7) * 100 / $2 }')
$(awk '($1 ~ /^(.*):$/) { gsub(/:/, "", $1); printf "interface,type=embedded,host='${HOST}',name=%s rx_bytes=%d,rx_drop_count=%d,tx_bytes=%d,tx_drop_count=%d\n", $1, $2, $5, $10, $13 }' /proc/net/dev)
$(df -B1 | awk '($1 != "Filesystem") { gsub(/%/, "", $5); printf "filesystem,type=embedded,host='${HOST}',device=\"%s\",mountpoint=\"%s\" total_bytes=%d,used_bytes=%d,available_bytes=%d,used_percent=%d\n", $1, $6, $2, $3, $4, $5 }')
load,type=embedded,host=${HOST} $(awk '{print "avg1=" $1 ",avg5=" $2 ",avg15=" $3}' /proc/loadavg)
users,type=embedded,host=${HOST} $(who | awk '($5 !~ /tmux/) {logins++} END { printf "login_count=%d,session_count=%d", logins, NR }')
battery,type=embedded,host=${HOST},id=1 capacity_percent=$(cat /sys/class/power_supply/BAT1/capacity),cycle_count=$(cat /sys/class/power_supply/BAT1/cycle_count),voltage_uv=$(cat /sys/class/power_supply/BAT1/voltage_now)
$(for i in /sys/class/thermal/thermal_zone*; do test -r $i/type && test -r $i/temp && echo "sensor,type=embedded,host=${HOST},name=$(cat $i/type) mdegc=$(cat $i/temp)"; done)
"
```

This gives me stats about memory and disk (SSD + µSD) usage, network traffic, load, temperatures, and current battery charge and voltage. Unfortunately, power readings do not seem to be available.

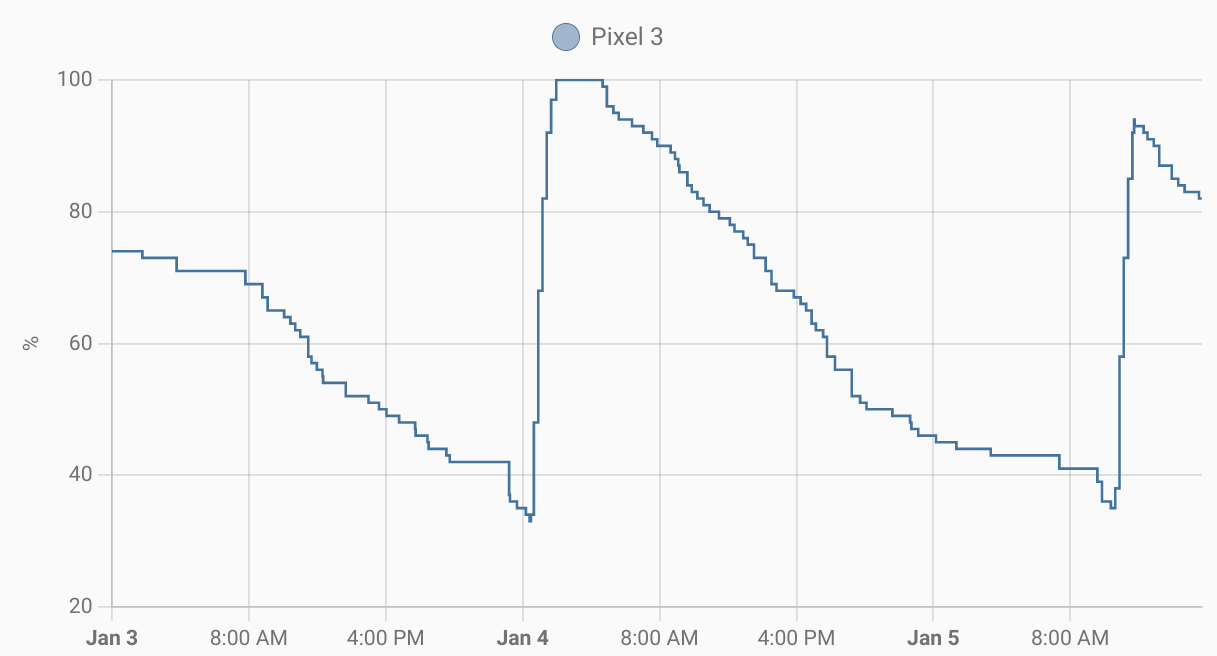

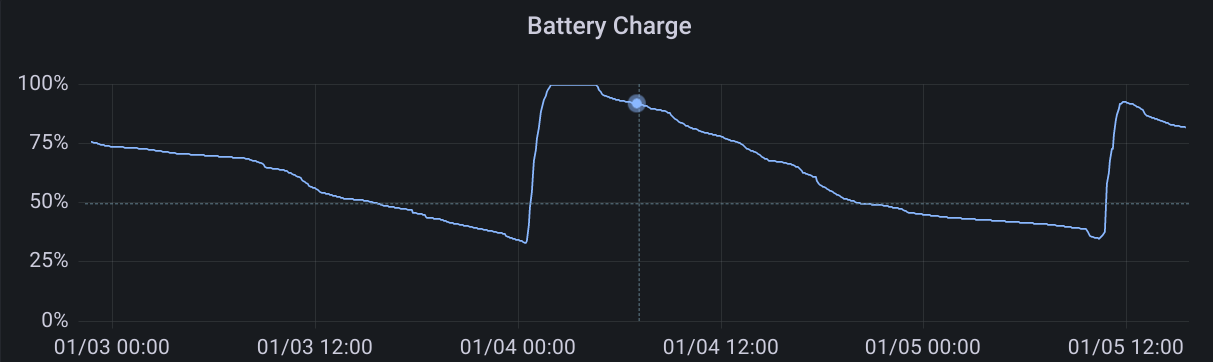

For instance, the following screenshots show an evening DOOM session, followed by leaving the Deck idle to finish some downloads.

They allow for insights such as:

- The Steam Deck typically reaches 80°C when running hardware-intensive games

- Battery drain really depends a lot on what kind of game you're playing

- The Lenovo USB-C Slim Travel Dock I'm using for working at home works just fine with the Steam Deck, but fails to negotiate proper power delivery, so the battery slowly drains when playing.

EOS M50 Eyecup / Viewfinder Repair

Recently, I managed to drop my Canon EOS M50 while changing lenses. The fall wasn't that high (maybe 20cm, give or take), but exerted enough force on the body to dislodge the viewfinder's eyecup. This caused the viewfinder's proximity sensor to always detect something (namely, the eyecup's plastic), which, in turn, meant that the camera would no longer operate its 3" LCD – after all, someone was using the viewfinder, so better turn it off in order to save power.

Trying to shove the eyecup back into place did not work at all, and the fact that Canon does not treat it as user-serviceable or -replaceable did not bode too well. After a few weeks' worth of hesitation, I decided to try disassembling the camera up to the eyecup and repair / re-align it myself. It was long out of warranty, so at least I could not void anything by trying my luck. There was just the risk of “verschlimmbessern” (making something worse by trying to improve it), i.e., breaking the camera entirely when trying to repair it.

So, as usual: only do this if you know what you're doing, and don't blame me if something breaks.

Disassembly

Getting to the eyecup requires removal of nine (black, plastic?) PH0 screws and two parts of the camera's plastic shell. I did not check the screw types thoroughly – they all seemed quite similar, but I still made sure to re-apply each screw to the correct hole just to be on the safe side.

First, remove the microphone and NFC cover on the left. It is held in place by three screws, and can be carefully lifted out once they are loose. There are no plastic clips or anything, so you should not need to apply force.

Then, flip out the 3" LCD so that it is out of the way and reveals two screws below the viewfinder. Remove these, the two screws at the tripod mount, and the two screws on the right side. The plastic part that you can now remove holds the control buttons and is connected to the camera with a flat flex cable right below the control buttons. So, carefully fold out the plastic back cover (including the control buttons), and tilt it to the right to avoid stressing said cable. You only need to rotate it by about 90° in order to expose the final (tenth) screw securing the eyecup. You can either disconnect the cable and store the back cover somewhere safe, or leave it connected and be extra careful not to damage the cable.

Repair

Now, remove the metal screw holding the eyecup. In my case, once the screw was out, I could easily move the eyecup back into place (without having to exert force) and was already done with the repair. I expect that you can also replace the entire eyecup at this point, but I did not try that.

Re-Assembly

- Secure the eyecup with the metal screw

- Reconnect the flat flex cable leading to the control buttons on the back cover

- Snap the back cover back into place. Do not secure it yet.

- Turn on the camera and verify that eyecup / viewfinder, 3" LCD, and the control buttons on the back (next to the LCD) are working

- Turn the camera off

- Secure the back cover with its six black screws

- Snap the side cover back into place

- Secure the side cover with its three black screws

In my case, I did not verify that the control buttons were working before re-applying all screws. While I had not unplugged the flat flex cable, I had still managed to loosen its connector, so I had to go back and reconnect it in order to have a working camera again. Before proceeding with the re-assembly, the connector should look roughly like this:

Apart from that, this was a pleasantly straightforward repair, and definitely better than just not using the LCD anymore.

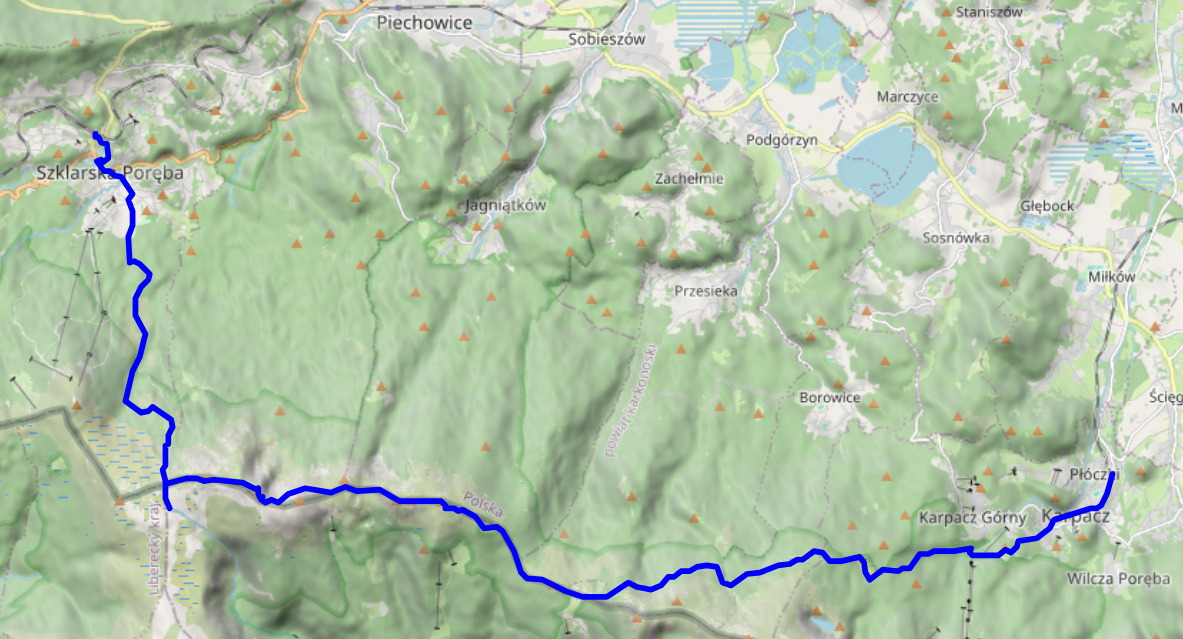

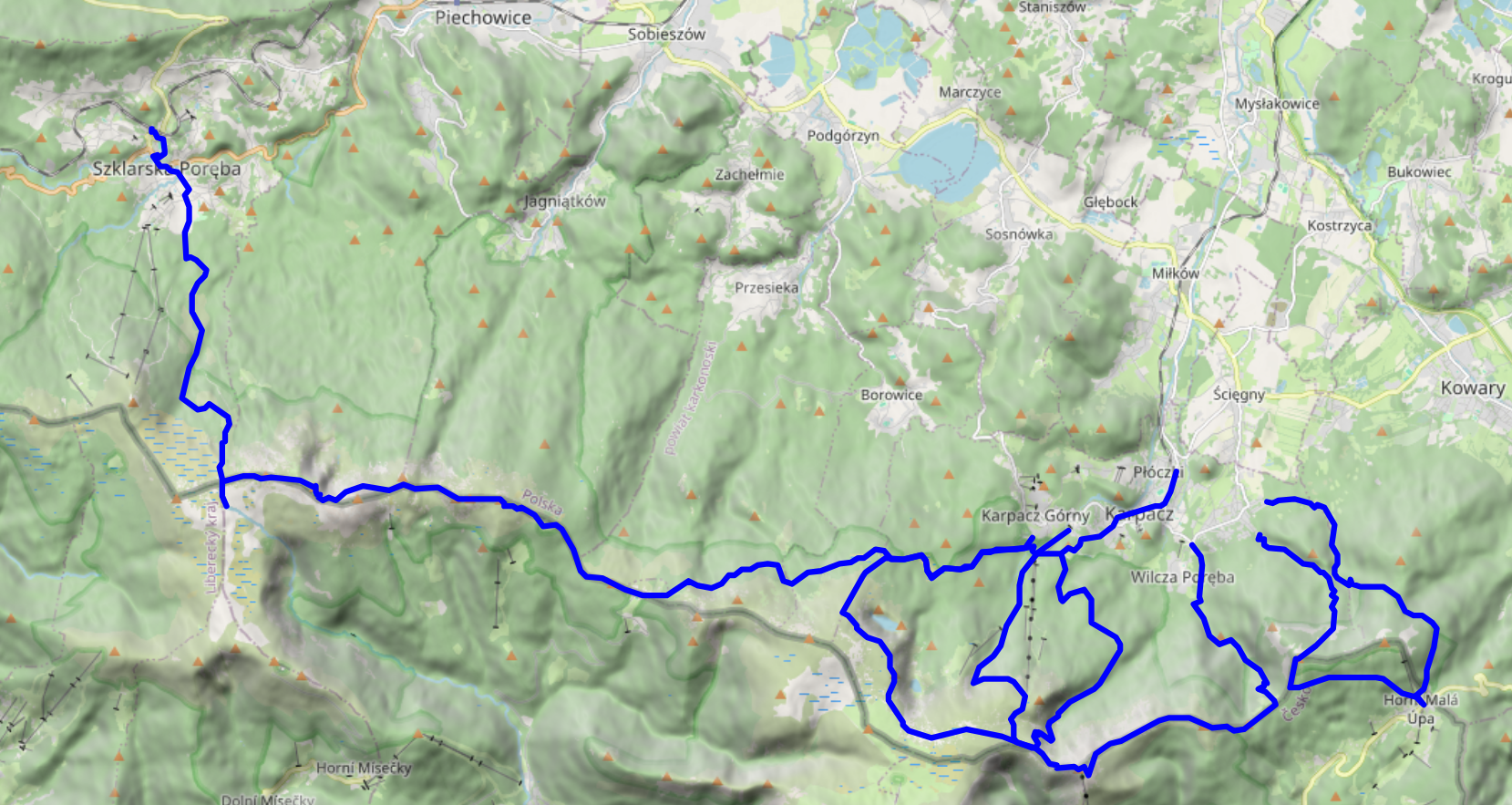

On the fourth and final full day of our vacation in the Giant Mountains, we decided to go for a change of scenery: take the train to Szklarska Poreba (Schreiberhau), walk up to Pramen Labe (Elbquelle / spring of the Elbe river), and then, depending on time of day and our appetite for more, either take the train back to Karpacz or walk back there across the peaks of the Giant Mountains. Unsurprisingly, we ended up walking the 20km or so from Pramen Labe back to Karpacz, and I can only say that that was the best decision we could have made.

- KD D62 Karpacz (09:00) → Jelenia Góra (09:18)

- KD D6 Jelenia Góra (09:24) → Szklarska Poreba Górna (10:15)

Pramen Labe

Szklarska Poreba is a comfortable little town, and seems like less of a tourist attraction than Karpacz. Only downside: it's located in a valley, with the train station where we got off sitting on the hills on one side and the Giant Mountains on the other. So, we first had to lose some precious elevation only to climb back up again on the other side. Apart from that, the way up went as usual: it started out relatively smooth and even, and then got steep and stony. There were some more stone formations and water streams, but nothing out of the ordinary.

Pramen Labe was pretty crowded, so we didn't stay for too long (13:30 to 14:00 or so). The spring itself is your typical ordinary spring, augmented with a nice list of some of the towns and cities it passes before it finally flows into the North Sea. It's located on a relatively large plateau, so from the spring itself, you don't have that much of a view into the surrounding mountain- or countryside.

The Ridge

The first peak we passed was Violik / Łabski Szczyt (Veilchenstein / violet peak). Apparently, its granite rock is slowly ground down by freezing water, leading to its slightly unnatural look as if someone had used a large shovel to pile up a heap of stones. It was also home to some prime specimens of what looked like moss, possibly Trentepohlia iolithus (Veilchenmoos, actually an alga) or Psilolechia lucida (Schwefelflechte, a lichen).

Up next were Vysoká pláň (high plain), a small peak located on a wide plateau that sits at about 1,400 metres above sea level, and the neighbouring Śnieżne Kotły (Schneegruben / snow pits). Vysoká pláň features the former Schronisko „Nad Śnieżnymi Kotłami” (Schneegrubenbaude / shelter “above the snow pits”), which used to offer rest to hikers and mountain climbers. These days, it is instead used as a radio tower: Radiowo-telewizyjny Ośrodek Nadawczy Śnieżne Kotły. Śnieżne Kotły tend to be a repository for not-yet-melted snow late into spring, but were absolutely devoid of it when we passed them in early August. In any case, the view from the upper side of the cliff was quite spectacular. We spent a few minutes admiring the views, and continued at about 15:00.

We continued around Vysoké kolo (Hohes Rad / high wheel), whose peak consists entirely of broken granite, towards Špindlerova bouda (Spindlerbaude / Špindler's hut), a former shelter that now serves as a hotel.

The peak of Vysoké kolo really is quite impressive – I only have pictures of the hiking path around it; see the Wikipedia links for more. Some of the rocks on the path are loose and there are no handrails or anything – do be careful where you step.

The remainder of the way towards Špindlerova bouda provided more granite heaps, mountain pines, and other kinds of rock formations. Also, Špindlerova bouda itself actually features a bus stop, located at a convenient 1,198 metres above sea level.

Descent

From here on, continuing the path along the peaks would have taken us to Słonecznik, which we had already visited two days earlier. Instead, we descended to the north. We had actually planned to make our descent before Špindlerova bouda; however, it turned out that that path was closed to hikers.

At this point, the clock was reading 17:00, we still had quite a few kilometres left to go, and the shadows were already growing longer. We had the sun at our backs and got some nice views across the Polish lowlands, plus the usual selection of rocks, more or less forested areas, and marshlands. We reached Polana - Kotki (Katzenschlosslichtung / cat castle glade) at 18:45, and got back to Karpacz at around 20:15.

See lib.finalrewind.org/Pramen Labe for more pictures.

Krkonoše day 3: Malá Úpa and Tabule

This is the third of four posts about our 2025 vacation in the Giant Mountains (Krkonoše / Karkonosze), see Vacation in the Giant Mountains for an introduction.

We wanted to have a change from the typical Polish food in the evening, and, thus, decided to climb up to Horní Malá Úpa on the Czech side of the Giant Mountains, have lunch there, walk a bit along the ridge, and return to Karpacz.

Ascent, Part 1

We started at around 10:30 from the very west of Karpacz, specifically its Osiedle Skalne suburb. From there, we took a path along (and, as often as not, across) the Malina stream (Langwasserbach). Some crossings came with bridges, others with broken bridges, and some with just a few strategically placed rocks. The latter parts of the path are helpfully marked with exclamation marks, likely indicating precisely these challenges. Anyhow, we enjoyed the quiet we had in this part of the forest, and made it through all fords without surprise baths.

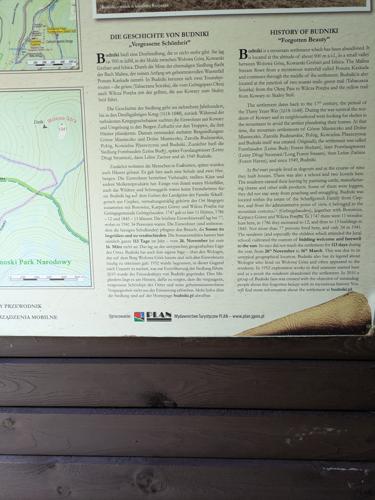

Budniki

At 12:00, about a third of the way up, we reached former Budniki (Forstbauden / Forstlangwasser). We weren't aware of this site when planning the trip, so that was a pleasant surprise. Essentially, Budniki is a former settlement that, due to its location very close to the peaks of the Giant Mountains, had the peculiarity of not receiving any direct sunlight for 110 days a year. Apparently, it was abandoned around 1950 due to preparations / explorations for uranium mining operations. We also learnt about Wołogór, which doesn't seem to have an English or German Wikipedia page that I can link. Furries, they're everywhere!

We also found out that the bread we had brought along was not a proper dark bread, but simply wheat with activated charcoal. That's the kind of surprise you can get when you aren't fluent in Polish.

Ascent, Part 2

From Budniki on, the remaining 400-or-so metres of elevation got quite challenging, with uneven and pretty steep terrain that just kept going on and on. When we finally reached a more even path close to the ridge line, we were greeted with the usual occasional flooding, lush green, and (at about 14:00) a sign welcoming us to Česká Republika.

Horní Malá Úpa

For English speakers, the jokes related to the name of Horní Malá Úpa (Ober-Kleinaupa / upper Malá Úpa) write themselves. So naturally, we had to pose for the obligatory photos near the entrance sign. Apart from that, the municipality is unspectacular. There are some border stones that still bear markings of the former ČSSR (Tschechoslowakei), some skiing infrastructure, and buses that are specifically outfitted for taking on bikes (or, possibly, skis). We had lunch at Pivovar Trautenberk, which I can recommend. Also, the nice part about Česká is that if you order lemonade, there's a high likelihood that you'll be served kofola 😋.

Tabule

After some exploration and our lunch break, we left Horní Malá Úpa for the final climb up the nearest peak at around 15:30. We started out in a dense forest, and then, as the vegetation grew thinner, got some views towards other parts of the Giant Mountains.

We reached Tabule (Tafelstein / table peak) at about 17:00, and took in some more scenery. Once again, we could spot Sněžka in the distance, and also a little bit of Karpacz and the Polish lowlands.

Descent

The descent took us slightly west of the path we used for the ascent, and was just as steep. It also really drove home just how much altitude (and, likely, other factors) affects the vegetation – we got everything from low bushes to coniferous and birch forests.

We passed Budniki once more, and then followed the Skałka stream for a bit. Here, we were faced with another surprise: a (possibly carbonated) spring that not even OpenStreetMap knew about.

We got back to Karpacz at about 19:00. As usual, more photos are available at lib.finalrewind.org/Tabule 2025.

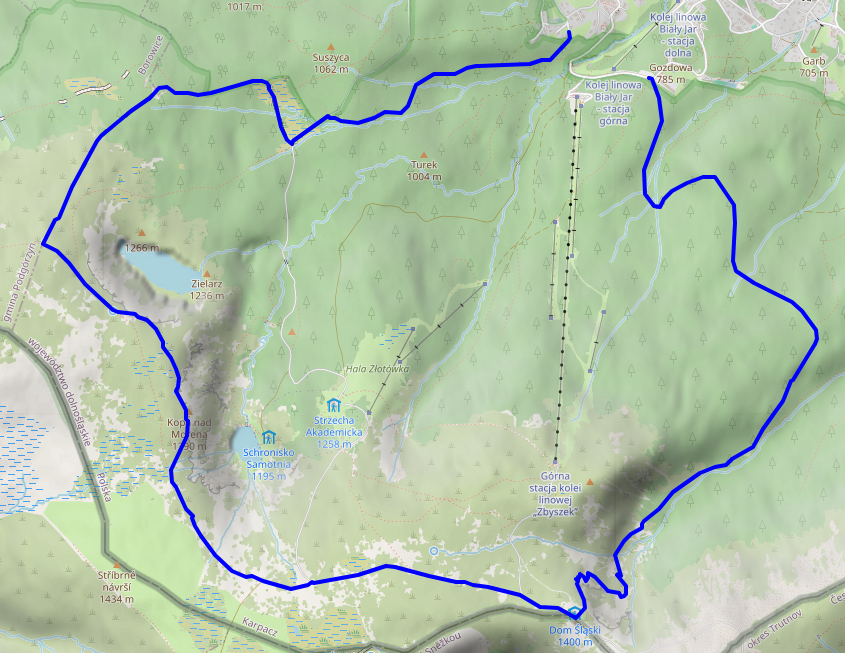

Krkonoše day 2: Słonecznik: Wetlands, Stones, and Ponds

This is the second of four posts about our 2025 vacation in the Giant Mountains (Krkonoše / Karkonosze), see Vacation in the Giant Mountains for an introduction.

On this day, we decided to make our ascent more to the east, take a look at the wetland we'd find there, and then see where our intuition took us.

Ascent

We started at around 11:00 from Karpacz Górny (“upper Karpacz”) and followed a path along the Pląsawa stream towards the wetland near Polana - Kotki (Katzenschlosslichtung / cat castle glade). The path along the stream is steep, but relatively even, and the stream provides a nice background soundtrack to the ascent. Right before Polana - Kotki, we merged with the (decidedly more crowded) main track towards Sněžka, and passed a KPN employee who glanced at my smartphone to verify that we did indeed have KPN tickets. Luckily, most of the crowd continued to Sněžka, whereas we left the track to the east, right into a small patch of wetland.

Wetlands

It's a wet, grassy and relatively plain area that seems to be fed exclusively by ground water. I don't quite know whether it's a fen, bog, or marsh, but probably something along those lines. There are the usual elevated wooden walkways to help you traverse it, and already some nice views across the mountainside. We also found a lizard who was enjoying the sun :)

Stones

We followed the path towards the ridge of the mountain range, and passed quite a few notable granite structures. The path itself also grew more rocky and uneven, interleaved with wooden walkways that were decidedly in disrepair, featuring frequent occurrences of broken or missing planks. It's a good idea to look where you're going, and not just take in the (gorgeous as usual) nature around you.

The first stone formations we passed were the Pielgrzymy (Pilgersteine / pilgrims' stones), with views towards Koci Zamek (Katzenstein / cat rock) below and Słonecznik (Mittagsstein / sunflower or noon rock, depending on whether you translate from Polish or German) above. One of them is apparently open for climbing, and if you do find a reasonably safe path up one of its plateaus, you are rewarded with a pretty nice view.

Going up further, we reached Słonecznik at around 15:00, but not before enjoying some more views down towards the valley. As Słonecznik itself was a bit crowded (though far less than Sněžka on the day before), I don't have any photos of it – see the Wikipedia link above for two samples. Just like Sněžka peak, it's also a good spot for some radio range tests, so we stayed for a bit.

Ponds

Słonecznik is also where the path up the ridge terminates, and you get to decide whether you want to take on the summits to the east or to the west. We opted for the east, which would lead us back towards Sněžka and Karpacz. As on the day before, the local groundwater frequently found its way onto the path. Apart from that, the path was relatively even, the terrain relatively easy, and it wasn't too crowded. This seems to be a rather tame segment of the Krkonoše ridge line.

Before too long, we ended up above Wielki Staw (Großer Teich / large pond) and, a bit later, Mały Staw (Kleiner Teich / small pond). The water was quite calm, clear, and full of rocks that had fallen into it over time.

Descent

We decided to make our descent at Dom Śląski (Silesian house), a tad eastwards of the path we used for our ascent on the day before, hoping that it'd be less crowded. But before that, we got yet another view of Sněžka, which also seemed slightly less crowded than the day before – possibly because it was already quite late in the day (around 16:30), and the shadows were already growing longer by the minute.

Our descent took us from Dom Śląski down into the Kocioł Łomniczki (Melzergrund) valley, right along the Główny Szlak Sudecki (Sudeten-Hauptwanderweg / Main Sudetes Trail). Kocioł Łomniczki valley is a former glacier site, and as such the path starts out very steep and rocky.

It also features a memorial / symbolic graveyard for all those who passed away on the mountain. You may decide to hum the tune of the Celeste – Resurrections soundtrack at this point.

In any case, once you've made it past the steep part, you're rewarded with a chance to cool off your feet in the Łomniczka stream, and easy walking for the remaining hour or so to Karpacz.

As usual, our trip ended at around 19:00, and more photos are available at lib.finalrewind.org/Słonecznik 2025.

Krkonoše day 1: Sněžka (snow top) to Soví sedlo (owl pass)

This is the first of four posts about our 2025 vacation in the Giant Mountains (Krkonoše / Karkonosze), see Vacation in the Giant Mountains for an introduction.

On our first full day, we decided to start with the most obvious target: Sněžka (snow top / Schneekoppe), the highest summit of the Giant Mountains (and also of the Sudetes mountain range and of the Czech Republic).

Starting near Karpacz train station (600m) at about 09:00, we took the Śląska Droga path from the outskirts of Karpacz (800m) to Biały Jar (1225m), which we reached at about 11:00. It's steep, but easy terrain, and seems to be the main tourist trail from Karpacz up to Sněžka: it wasn't exactly packed, but definitely crowded. As neither of us hikes up mountains every day, we took frequent breaks along the way – there aren't too many (free) seats or benches, but plenty of stones that can serve as seats when needed.

Up next is a relatively even segment via the Zbyszek's upper aerial way station and Dom Śląski (Silesian house); we got there at about 12:15. The path already offers some nice views down into the Polish lowlands and towards Sněžka, and also seems to be quite popular. It's close to the timber line, and you can already see the vegetation getting smaller and more compact.

Finally, we took a segment of Droga Przyjaźni Polsko-Czeskiej (the Polish-Czech friendship trail) for the final serpentine climb up Sněžka (1603m). This is a pretty steep and stony one-way path; alternatively, Droga Jubileuszowa (Jubilee road) offers a less steep way up through better terrain. Droga Przyjaźni Polsko-Czeskiej definitely has the better views – you get to look into an unnamed and unpopulated Czech valley and can also see the next range of the Giant Mountains and the Czech lowlands behind it, as well as a plateau of the Giant Mountains to the west.

Sněžka itself was pretty crowded, but a good spot for radio experiments, so we stayed there from about 13:00 to 13:50. The views are nice, but the paths around it are more interesting. The Sněžka peak sits comfortably above the timber line, so there is hardly anything growing there at all.

We quickly scrapped our original plan of returning via Śląska Droga, and instead chose to follow Droga Przyjaźni Polsko-Czeskiej to the east until we'd find the next path down to Karpacz at Soví sedlo / Sowia Przełęcz (owl pass / Eulenpass). This turned out to be an excellent idea – the path was far less busy, and really drove home just how much a few dozen metres of ascent or descent affect the height of the vegetation next to the path. As the trail largely follows the Czech–Polish border, you'll frequently pass border stones.

Also, the views back to Sněžka and to either side are, unsurprisingly, very good.

We reached Soví sedlo / Sowia Przełęcz (owl pass / Eulenpass) at about 15:20. The way back to Karpacz took us through a valley next to the Płomnica (Plagnitz) stream and was easily as steep as the ascent towards Biały Jar and Sněžka, just with more serpentine segments and less even surfaces. There's lots of fruit growing in the upper parts of the path, and plenty of water (including on the trail itself) further down.

We returned to our room near Karpacz train station at about 18:30. More photos are available at lib.finalrewind.org/Sněžka 2025.

In early August 2025, dm2lct and I spent a few days in the Giant Mountains (Krkonoše / Karkonosze) at the Czech/Polish border. Specifically, we booked a room in Karpacz, a town at the foot of the Sněžka, which happens to be the highest summit of the Giant Mountains (and also of the Sudetes mountain range and of the Czech Republic).

The Giant Mountains are absolutely worth a visit – we were there for four days and I feel like we saw quite a lot, but certainly not all of the diverse landscapes and views they have to offer. Just note that, unless you want to take one of the few lifts scattered throughout the mountain range, you'll have to climb 700 to 1,000 metres each day depending on where you are staying and where you are going.

I'll write a separate blog post for each of the four days (i.e., for each of the four summits / parts of the mountain range that we visited during our stay). This one just serves as a documentation of arrival, departure, and some notes on Karpacz and the Karkonosze National Park (Karkonoski Park Narodowy / KPN) in general.

Arrival

There is a connection from Dresden to Karpacz every other hour, and the entire trip takes about four hours when everything goes well. In our case, we started with a delayed bus to Bautzen, thus leading to a far longer layover in Görlitz than anticipated. Görlitz is absolutely worth a visit though, so no harm done.

- Trilex RE1 Bautzen (11:16) → Görlitz (11:50)

- KD D19 Görlitz (13:39) → Jelenia Góra (15:15)

- KD D62 Jelenia Góra (15:32) → Karpacz (15:56)

Karpacz

Karpacz looks like a pretty typical Polish tourist town. It offers plenty of hotels, pensions, and simpler rental options, and also plenty of restaurants and kitsch. There are two supermarkets close to the train station, and a few more scattered throughout the remainder of the town. Note that most restaurants close between 20:00 and 22:00, so you should not wait too long in case you still want to have a warm meal after a day of hiking up and down the mountains.

On the language side, learning some Polish beforehand is a good idea. In our case, some remnants of school Polish and a dictionary on the smartphone were sufficient most of the time. A few people also speak at least a little bit of English; we didn't try German.

Karkonoski Park Narodowy (KPN)

The entire Polish side of the Giant Mountains is covered by a national park, entry to which costs 8 to 11 Złoty (about 2 €) per day and person, depending on whether you buy a single-, two-, or three-day pass. Especially on the popular tourist trails, you will find staff selling tickets at one of the entrances to the park – of course, you can pay by card, even at 1,000m above sea level. You also have the option of buying day passes online and then presenting those on your smartphone or as a print-out. The trails inside the park are mostly well-kept and their upkeep probably isn't that cheap, so overall, I'd say the fee is justified.

We did not encounter any ticket checks inside the park or on the way out, and there were also no ticket checks (or sales) on the ridges and peaks, which frequently criss-cross between Poland and the Czech Republic. So, if you're entering the Giant Mountains from the Czech side, you probably don't need a ticket even if you enter KPN area every now and then.

Departure

Due to several Nazi demos in Bautzen, we were not too keen on taking Trilex RE1 on the way back. As the line to Tanvald / Liberec was closed as well, we instead opted for the long way round: Karpacz → Sędzisław → Trutnov → Praha → Děčin → Bad Schandau → Dresden. This took the better part of the day and was absolutely worth it.

- KD D62 Karpacz (06:52) → Jelenia Góra (07:15)

- KD D6 Jelenia Góra (07:22) → Sędzisław (07:49)

- KD D26 / Os 25425 Sędzisław (07:58) → Trutnov střed (08:45)

- ČD R10 “Krkonoše” Trutnov střed (08:47) → Praha hlavní nádraží (11:42)

- ČD R20 “Labe” Praha hlavní nádraží (14:45) → Děčin (16:33)

- RB U28 Děčin (16:41) → Bad Schandau (17:11)

- S1 Bad Schandau (17:14) → Dresden Hbf (17:58)

The whole endeavour cost us about 60 € (about 30€ per person):

- Karpacz → Jelenia Góra: – (vending machine was broken and the conductor didn't know how to sell cross-country tickets, so we got to travel for free)

- Jelenia Góra → Královec: 40 PLN (about 10€)

- Královec → Trutnov: 102 CZK (4.20 €)

- Trutnov → Děčin: 999 CZK (41.13 €)

- Děčin → Schöna: 80 CZK (about 4 €)

- Schöna → Dresden: Deutschlandticket

Also, I'd like to note that the official České Dráhy app (Můj vlak) is easily the best official railway app I've ever used. It has detailed and up-to-date information about trains, free/reserved seats, delays / disruptions / construction sites, and anything else you need. Also, despite booking a conventional day ticket for two adults that was not even bound to specific trains, we were able to reserve seats on R10 and R20 free of charge. Rail travel in Poland doesn't even come close.

Sędzisław → Trutnov

We did not look up the rolling stock beforehand, and were positively surprised when we encountered a ČSD M 152.0 (“Brotbüchse”) on the cross-border line from Poland to the Czech Republic. It's a single-carriage Diesel train, powered by a conventional bus engine, and was pretty empty – at least on the early Sunday morning at which we were travelling. It's not exactly modern or fast, but does its job and got us to Trutnov almost on time. From a nostalgia point of view, this was quite the jackpot.

Luckily, the layover in Trutnov střed happens before a single-track segment, with the train from Sędzisław coming from the segment that the R10 to Praha has to pass after departure. Hence, although our train from Poland had two minutes of delay, the R10 was waiting for us on the same platform and we could still board it. I have no idea whether this connection always works that well.

Praha → Děčin

Our ticket would have allowed us to take a EuroCity, but dm2lct specifically suggested the R20 line. As of 2025, its rolling stock still includes a luggage wagon that contains four 8-person compartments – and features windows that you can actually open. You hardly ever see that anymore these days, so taking this train on the way back also was quite a treat. The noise at an open window can be deafening at times, though – both the wheels and the brakes are anything but quiet, especially when walls or rocks next to the tracks reflect their noise back towards the train.

Enabling Filesystem Quotas on Debian

Because I keep forgetting how I do it, here's how to enable filesystem quotas on a reasonably recent Debian server.

For simplicity, let's say the mountpoint is /home and the device /dev/storage/home.

sudo apt install quota

- Add usrquota as a mount option to the /etc/fstab entry for /home

sudo systemctl daemon-reload

sudo umount /home

sudo tune2fs -O quota /dev/storage/home

sudo mount /home

For usrquota alone, a remount should be sufficient, but tune2fs needs to run offline in this case.

Now, all that's left is setting quotas.

This only needs to be run once for each new account, preferably automatically as part of some kind of account management system.

setquota -u account block-soft block-hard inode-soft inode-hard /home

You can check quota settings and usage with repquota -as.
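For the "preferably automatically" part, here is a minimal C sketch of how an account management tool might assemble the setquota call. It assumes setquota's default unit for block limits (1 KiB blocks, as described in its man page) and uses 0 for "no limit"; the struct, the helper name, and the example account are hypothetical and not part of any existing tool.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* setquota's block limits default to 1 KiB units: 1 GiB = 1024 * 1024 KiB. */
#define KIB_PER_GIB (1024ULL * 1024ULL)

/* Hypothetical container: argv points into the number buffers below. */
struct quota_cmd {
    char bsoft[24], bhard[24], isoft[24], ihard[24];
    char *argv[9];
};

/*
 * Fill cmd->argv with
 *   setquota -u ACCOUNT BLOCK-SOFT BLOCK-HARD INODE-SOFT INODE-HARD MOUNTPOINT
 * (NULL-terminated, ready for execvp()). Block limits are given in GiB,
 * inode limits as plain counts; 0 means "no limit".
 */
static void build_setquota_argv(struct quota_cmd *cmd, const char *account,
                                unsigned long long soft_gib,
                                unsigned long long hard_gib,
                                unsigned long long inode_soft,
                                unsigned long long inode_hard,
                                const char *mountpoint)
{
    assert(cmd != NULL && account != NULL && mountpoint != NULL);
    assert(hard_gib == 0 || soft_gib <= hard_gib);

    snprintf(cmd->bsoft, sizeof cmd->bsoft, "%llu", soft_gib * KIB_PER_GIB);
    snprintf(cmd->bhard, sizeof cmd->bhard, "%llu", hard_gib * KIB_PER_GIB);
    snprintf(cmd->isoft, sizeof cmd->isoft, "%llu", inode_soft);
    snprintf(cmd->ihard, sizeof cmd->ihard, "%llu", inode_hard);

    cmd->argv[0] = "setquota";
    cmd->argv[1] = "-u";
    cmd->argv[2] = (char *)account;    /* exec* expects char *const[] */
    cmd->argv[3] = cmd->bsoft;
    cmd->argv[4] = cmd->bhard;
    cmd->argv[5] = cmd->isoft;
    cmd->argv[6] = cmd->ihard;
    cmd->argv[7] = (char *)mountpoint;
    cmd->argv[8] = NULL;
}
```

Passing the resulting argv to execvp() or posix_spawnp() sidesteps shell quoting entirely; repquota -as should then list the new limits.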

Installing Debian on a ThinkPad T14s Gen 6 with Intel CPU

When setting up my new work laptop (replacing my X270, which was more than eight years old), I found that the freshly installed Debian unstable was unable to produce any kind of graphics output. X11 complained about not finding any screens, and the text console also broke as soon as the kernel tried configuring it. Long story short: it turns out that Linux 6.12, as shipped with Debian unstable, is simply too old for Intel Arc 130V/140V graphics and/or Intel Lunar Lake / Core 258V CPUs. With Linux 6.14, everything works right out of the box.

Luckily, you can just install Ubuntu kernels on Debian. So, solving this issue was as simple as issuing the following commands:

wget \
  https://kernel.ubuntu.com/mainline/v6.14/amd64/linux-headers-6.14.0-061400-generic_6.14.0-061400.202503241442_amd64.deb \
  https://kernel.ubuntu.com/mainline/v6.14/amd64/linux-headers-6.14.0-061400_6.14.0-061400.202503241442_all.deb \
  https://kernel.ubuntu.com/mainline/v6.14/amd64/linux-image-unsigned-6.14.0-061400-generic_6.14.0-061400.202503241442_amd64.deb \
  https://kernel.ubuntu.com/mainline/v6.14/amd64/linux-modules-6.14.0-061400-generic_6.14.0-061400.202503241442_amd64.deb

sudo apt install \
  ./linux-headers-6.14.0-061400_6.14.0-061400.202503241442_all.deb \
  ./linux-headers-6.14.0-061400-generic_6.14.0-061400.202503241442_amd64.deb \
  ./linux-modules-6.14.0-061400-generic_6.14.0-061400.202503241442_amd64.deb \
  ./linux-image-unsigned-6.14.0-061400-generic_6.14.0-061400.202503241442_amd64.deb

The new kernel version is automatically picked up by GRUB, so one reboot later everything worked as intended.

In late May 2025, dm2lct and I hiked across the Jizera Mountains (PL: Góry Izerskie / CZ: Jizerské hory / DE: Isergebirge), right at the Czech – Polish border. It's a very nice hike with some stunning views, and doable as a single-day trip from and back to Dresden – even with rail replacement services here and there. Just note that you might want to wear watertight boots – at least when we were up there, the majority of hiking trails on the summit were actually water streams.

See jizera-mountains.gpx for the approximate route we took. Note that I traced it after the fact, as we did not use GPS logs on our hike.

Arrival

We decided to start our climb from Świeradów-Zdrój (DE: Bad Flinsberg) on the Polish side of the mountains. We did have to get up at a slightly ungodly hour, but that was definitely worth it.

- TL RB60 Dresden Hbf (06:53) → Görlitz Hbf (08:33) – Deutschlandticket

As the direct connection to Zgorzelec was closed, apparently due to issues related to the German/Polish language level of the train drivers, the route to Świeradów-Zdrój included a nice little 50-minute detour through the twin towns of Görlitz and Zgorzelec. I think I'll come back for a proper visit to Görlitz old town on a later day. It even has a small tram network! Zgorzelec train station is quite cozy as well, featuring a small café right next to the waiting room.

- KD 69874 Zgorzelec (09:44) → Świeradów-Zdrój (10:55) – 18.75 PLN (4.45 €) each

The tracks in Poland had very noticeable rail joints – and a tight curve in Lubań actually managed to topple our water bottle. Apart from that, it's a pretty standard diesel train connection, ending at the single-platform terminus in Świeradów-Zdrój.

Going Up

Świeradów-Zdrój sits about 500 metres above sea level; the Jizera and Smrk peaks clock in at 1,107 and 1,124 metres, respectively. There's a cabin lift to Stóg Izerski (DE: Heufuder), situated about a kilometre from the train station and seemingly operating year-round, but walking certainly is the more interesting option.

After a few hundred metres through the town itself, you're faced with two options: a (partially serpentine) road, or a more direct (and, thus, steep) gravel-rock-ish path. We chose the latter option, which seems to be marked with a red-on-white stripe.

The path was mostly dry, except for a short segment near the Świeradówka reservoir that basically consisted of walking through a sometimes stony and sometimes just outright muddy water stream. That segment is not mandatory: an asphalt road offers the same way up with a slight (but dry) detour.

Apart from that, the way up already offers some very nice views into the valley – you just need to turn around and look back every now and then. The views include a wooden observation tower sitting almost in the valley itself. I have no idea why it's there.

Lunch Break

Once atop Stóg Izerski (DE: Heufuder), you can stop for lunch at Schronisko na Stogu Izerskim (DE: Heufuderbaude), a hostel/restaurant thingy offering typical Polish food such as pierogi (a kind of dumpling). Even if you're not hungry, it's worth stopping by to enjoy the views from the terrace.

Across the Ridge

Our next stop was Smrk (PL: Smrek / DE: Tafelfichte). It's three metres lower than the highest peak of the Jizera Mountains (namely, Wysoka Kopa / DE: Hinterberg), but features a nice observation deck.

The way to Smrk is comparatively even, but full of water streams that double as trails. It's pretty; just try not to get wet feet. It also features a border crossing from Poland to the Czech Republic. In our case, the “welcome to Poland” sign on the way back was missing, but the border stone itself was still present.

View

It's pretty cold and drafty on the observation tower, but the views are absolutely worth it.

Going Down

While our original plan was to return to Świeradów-Zdrój, we spontaneously decided that Nové Město pod Smrkem on the Czech side would make for a more interesting trip. It was a bit of a close call – the last train to Liberec departed around 17:00, and there was just a single fallback connection near 19:00 that might still have taken us home in case we missed the one to Liberec. Note that this was regular weekday service.

From the observation tower, you can follow paths marked with a blue-on-white stripe for a reasonably direct, steep, and often watery path down. The views alongside it do not disappoint at all, and even down in the valley, the surrounding terrain is still quite steep.

Departure

We arrived in Nové Město pod Smrkem at 16:45, 15 minutes before the last train to Liberec departed. Normally, there is a connection from Liberec to Zittau (DE) that would've taken us directly back to Dresden, but as only a rail replacement bus was available, we went with the slightly longer, but at least equally scenic route via Děčín – featuring a nice view towards Ještěd (DE: Jeschken) and its iconic tower. Even from the train, you really notice that this part of the Czech Republic is more mountainside than conventional countryside. Also, the ARV train used OpenStreetMap on its passenger information display, which is cool.

- Os 6381 Nové Město pod Smrkem (17:01) → Liberec (17:57) – 70 CZK (2.83 €) each

- ARV 1334 Liberec (18:28) → Děčín hl.n. (20:10) – 196 CZK (7.92 €) each

- RB U28 Děčín hl.n. (20:41) → Bad Schandau (21:09) – 1.80 € or so each

- S1 Bad Schandau (21:44) → Dresden Hbf (22:28) – Deutschlandticket

All pictures from the trip are available on lib.finalrewind.org/Góry Izerskie 2025.

Using cryptsetup / LUKS2 on SSHFS images

Occasionally, I need to open remote LUKS2 images (i.e., files) that I access via SSHFS. This used to work just fine: mount an sshfs, run cryptsetup luksOpen and access the underlying filesystem. However, a recent cryptsetup upgrade introduced (or changed?) its locking mechanism. Now, before opening an image file, it tries to acquire a read lock, which will fail with ENOSYS (Function not implemented) on sshfs mountpoints. This, in turn, causes cryptsetup to report "Failed to acquire read lock on device" and "Device ... is not a valid LUKS device.".

There doesn't seem to be a simple way of disabling this (admittedly, in 99% of cases desirable) feature, so for now I'm working around it by just having flock always return success, thanks to the magic of LD_PRELOAD and a flock stub:

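#include <sys/file.h>

/* Pretend that every flock() call succeeds, even on filesystems
 * (such as sshfs) that do not implement locking. */
int flock(int fd, int operation)
{
    (void)fd;
    (void)operation;
    return 0;
}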

Compile as follows:

${CC} -O2 -Wall -fPIC -c -o ignoreflock.o ignoreflock.c
${CC} -fPIC -O2 -Wall -shared -Wl,-soname,ignoreflock.so.0 -o ignoreflock.so.0 ignoreflock.o -ldl

And then call LD_PRELOAD=..../ignoreflock.so.0 cryptsetup luksOpen ...

(or sudo env LD_PRELOAD=..../ignoreflock.so.0 cryptsetup luksOpen ...).

ignoreflock provides a handy stub, Makefile and wrapper script for this.
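If silencing every lock feels too blunt, a slightly more conservative variant (my own sketch, not necessarily what ignoreflock ships) forwards each call to the real flock() via dlsym(RTLD_NEXT, ...) and only pretends success when the filesystem answers ENOSYS. It builds with the same two compiler invocations; on glibc versions before 2.34, the -ldl is actually required for dlsym().

```c
#define _GNU_SOURCE             /* for RTLD_NEXT */
#include <assert.h>
#include <dlfcn.h>
#include <errno.h>
#include <stddef.h>
#include <sys/file.h>

/*
 * Forward flock() to the next definition in the lookup chain (i.e. libc)
 * and only report success if the filesystem does not implement locking
 * at all (ENOSYS, as on sshfs). Real lock conflicts (EWOULDBLOCK) and
 * invalid descriptors (EBADF) are passed through unchanged.
 */
int flock(int fd, int operation)
{
    static int (*real_flock)(int, int);

    if (real_flock == NULL) {
        real_flock = (int (*)(int, int))dlsym(RTLD_NEXT, "flock");
        if (real_flock == NULL) {
            return 0;   /* lookup failed: fall back to the plain stub behaviour */
        }
    }

    int ret = real_flock(fd, operation);
    if (ret == -1 && errno == ENOSYS) {
        return 0;       /* locking unsupported here: pretend it worked */
    }
    return ret;
}
```

Unlike the plain stub, this still lets cryptsetup notice when another process genuinely holds a lock on a local device.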

Stop IDs in EFA APIs

EFA-based local transit APIs have two kinds of stop identifiers: numbers such as 20009289 and codes such as de:05113:9289.

travelynx needs a single type of ID, and this post is meant mostly for myself to find out which ID is most useful. The numeric one would of course be ideal, as travelynx already uses numeric IDs in its stations table.

Numbers

DM_REQUEST: Available as stopID in each departureList entry.

STOPSEQCOORD_REQUEST: Available as parent.properties.stopId in each locationSequence entry.

STOPFINDER_REQUEST: Available as stateless and ref.id.

COORD_REQUEST: missing.

Codes

DM_REQUEST: missing.

STOPSEQCOORD_REQUEST: Available as parent.id in each locationSequence entry.

Outside of Germany, the format changes, e.g. placeID:27006983:1 for a border marker (not an actual stop) and NL:S:vl for Venlo Bf.

STOPFINDER_REQUEST: Available as ref.gid.

COORD_REQUEST: Available as id and properties.STOP_GLOBAL_ID in each locations entry.

API Input

DM_REQUEST: Accepts stop names, numbers, and codes.

STOPSEQCOORD_REQUEST: Accepts stop numbers and codes.

Examples

- LinzAG Ebelsberg Bahnhof: 60500470 / at:44:41121

- LinzAG Linz/Donau Hillerstraße: 60500450 / at:44:41171

- NWL Essen Hbf: 20009289 / de:05113:9289

- NWL Münster Hbf: 24041000 / de:05515:41000

- NWL Osnabrück Hbf: 28218059 / de:03404:71612

- VRR Essen Hbf: 20009289 / de:05113:9289

- VRR Nettetal Kaldenkirchen Bf: 20023754 / de:05166:23754

- VRR Venlo Bf: 21009676 / NL:S:vl

Conclusion

Looks like numeric stop IDs are sufficient.

They're missing from the COORD_REQUEST endpoint, but that's not a dealbreaker -- all database-related operations work with DM_REQUEST and STOPSEQCOORD_REQUEST data.

Also, there seems to be no universal mapping between the two types of stop IDs.
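To keep the two kinds apart when handling user input or API responses, a small classifier is enough. This is a hypothetical C sketch (the enum and function names are made up, and this is not travelynx code): an all-digit string is treated as a stop number, anything containing a colon as an opaque code, and everything else (e.g. a stop name, which DM_REQUEST also accepts) as neither.

```c
#include <assert.h>
#include <ctype.h>
#include <errno.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical classification of EFA stop identifiers. */
enum stop_id_kind {
    STOP_ID_NONE,   /* neither, e.g. a stop name such as "Essen Hbf" */
    STOP_ID_NUMBER, /* e.g. "20009289" */
    STOP_ID_CODE,   /* e.g. "de:05113:9289", "NL:S:vl", "placeID:27006983:1" */
};

/*
 * Classify a stop identifier. For numbers, the parsed value is stored in
 * *number. Codes are treated as opaque strings, since there is no
 * universal mapping between codes and numbers.
 */
static enum stop_id_kind classify_stop_id(const char *id, unsigned long *number)
{
    assert(id != NULL && number != NULL);
    if (*id == '\0') {
        return STOP_ID_NONE;
    }

    /* Numbers consist of decimal digits only. */
    const char *p = id;
    while (isdigit((unsigned char)*p)) {
        p++;
    }
    if (*p == '\0') {
        errno = 0;
        unsigned long value = strtoul(id, NULL, 10);
        if (errno == ERANGE) {
            return STOP_ID_NONE;    /* too large to be a stop number */
        }
        *number = value;
        return STOP_ID_NUMBER;
    }

    /* Codes are colon-separated. */
    return strchr(id, ':') != NULL ? STOP_ID_CODE : STOP_ID_NONE;
}
```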

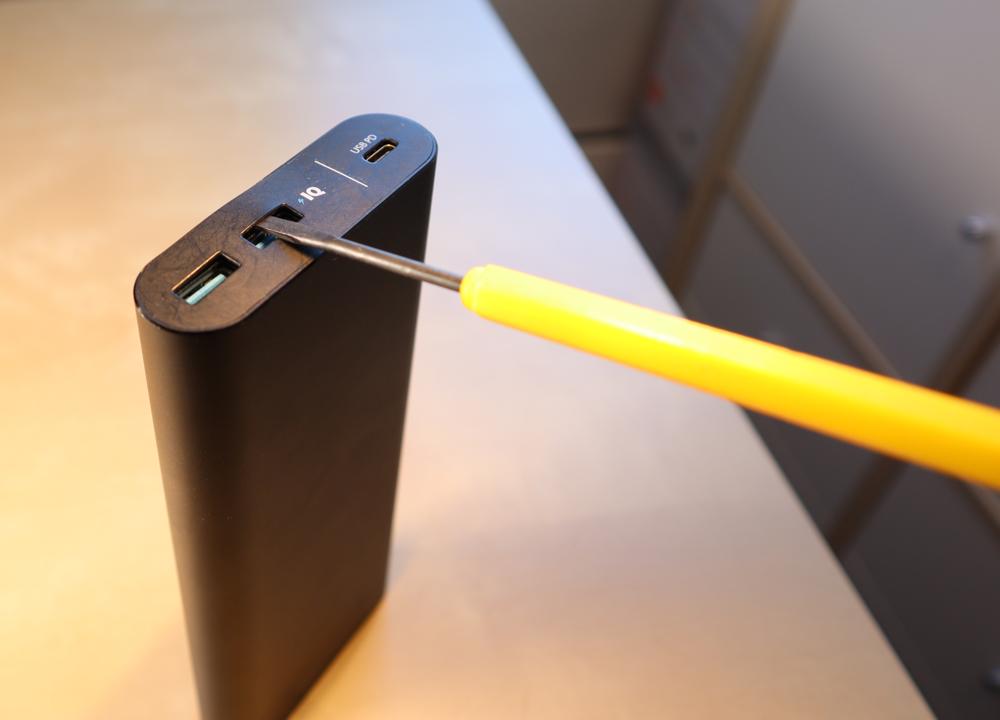

PowerCore 5000 Teardown and Repair

I usually carry a PowerCore 5000 powerbank with an attached USB-A to USB-C cable in my purse. In hindsight, always having the cable attached to it (rather than just when using it) is not the best idea, as it exerts considerable leverage on the USB port. So, to little surprise, at some point the output port stopped working unless the cable connected to it was pressed in the right direction.

Luckily, the powerbank is quite easy to open up, repair, and re-assemble.

Caution: This powerbank contains an 18.5 Wh LiIon cell. In normal operation, the (dis)charge PCB handles battery management tasks like short circuit protection. Disassembly exposes the raw 26650 LiIon cell, which likely does not contain a built-in protection circuit. Puncturing, shorting, heating, or otherwise mishandling it can lead to fire and/or explosion. Don't disassemble a powerbank unless you know what you are doing. Don't work with soldering irons close to LiIon cells unless you really know what you are doing.

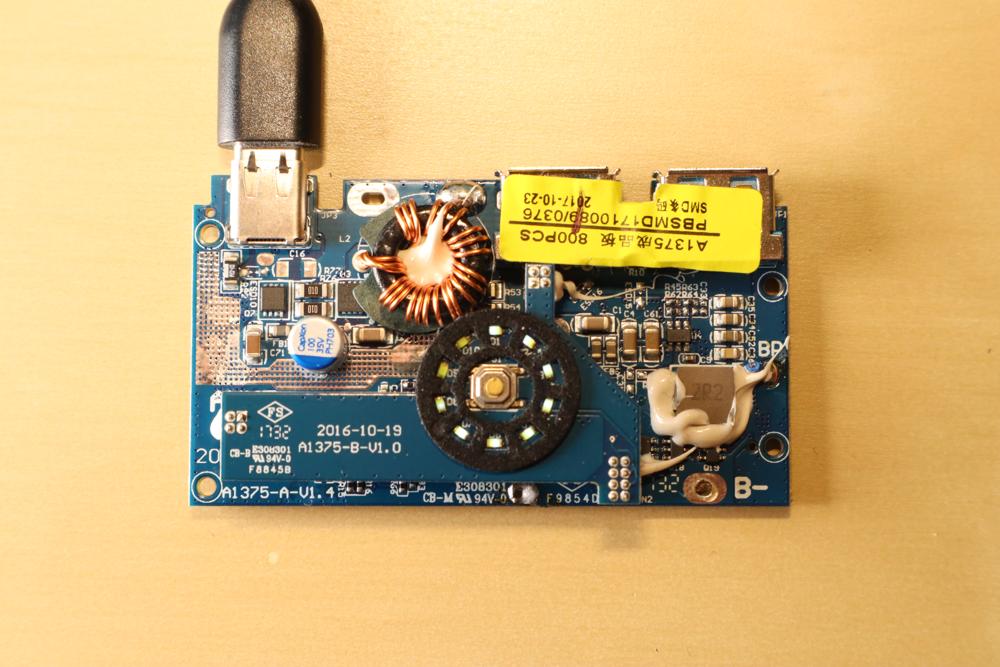

Teardown

The connector side of the power bank features a glued-on plastic cover on top of a screwed-on plastic cover.

The glued-on cover can be pried open with moderate effort by placing a suitable object between the two plastic covers, revealing the screwed-on second plastic cover.

With the powerbank placed on a table (not held in your hands), you can now take a PH00 screwdriver to release the three screws that hold it in place. Once that is done, simply lift the case up, revealing the actual circuitry (LiIon cell and PCB). If you were holding the powerbank in your hands while removing the screws, the circuitry likely fell out instead.

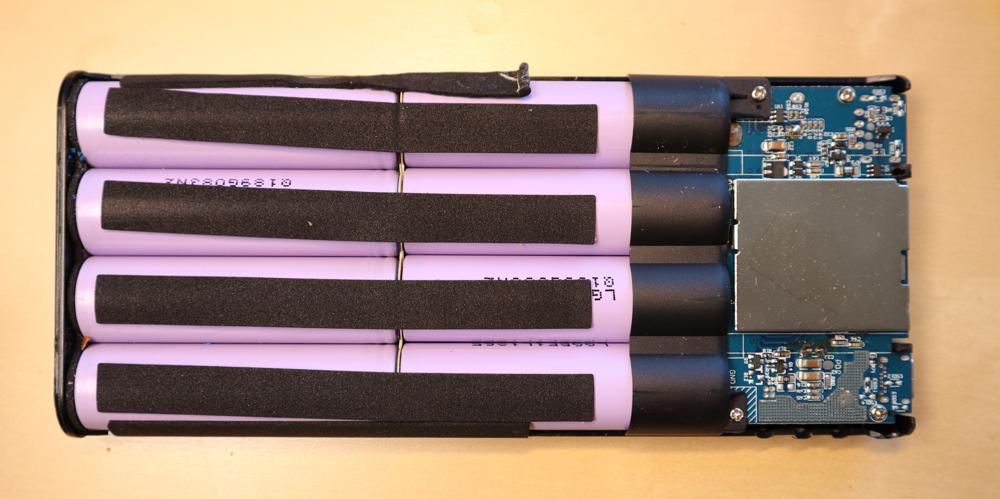

The innards are quite simple: A single 26650 LiIon cell; a single PCB; and a two-part plastic assembly featuring a button and LED diffusors.

Repair

In my case, the culprit was a loose solder connection between the USB-A output port and the PCB. Nudging the capacitor out of the way and carefully applying some additional solder to its GND and VCC pins solved the issue. Just be wary of the fact that you're operating a soldering iron next to a LiIon cell that does not like triple-digit temperatures…

Re-Assembly

- Slide the button assembly and diffusor back into the case until it locks into place.

- Slide the LiIon cell and PCB assembly back into the case.

- Place the powerbank on its bottom (i.e., so that the USB ports face up).

- Place the plastic cover on top of the PCB assembly and tighten its three screws.

- Put the glued-on top cover back on. It comes with alignment pins; in my case I did not have to apply new glue.

Caffeinated Chocolate

For the past ten years, I have been making caffeinated chocolate in order to always have a source of caffeine with me that I can consume in a pinch.

The recipe is relatively simple, but I never got around to writing it down outside of ephemeral microblog posts. So, here it is: Koffeinschoki.

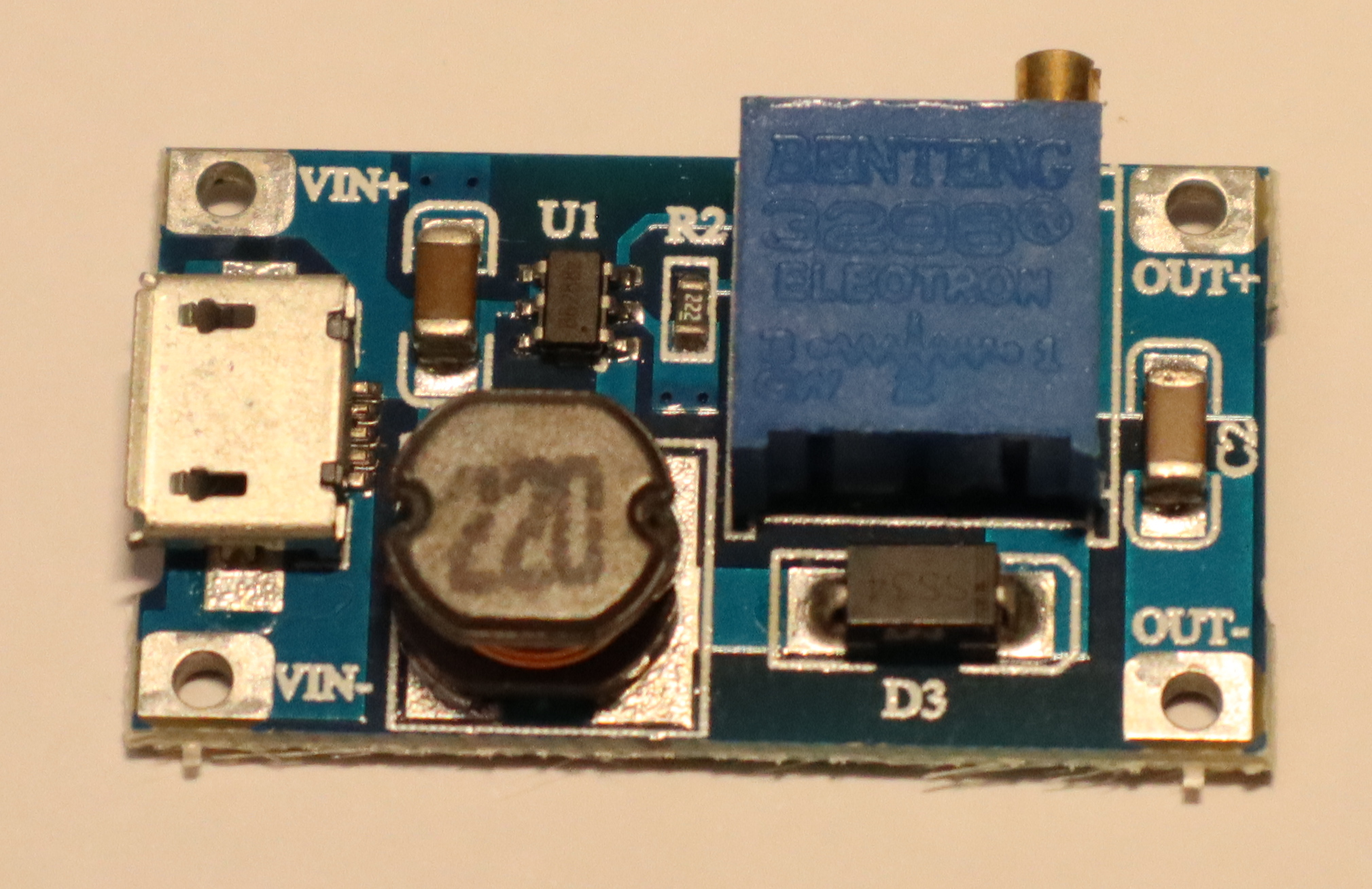

Benchmarking an AliExpress MT3608 Boost Converter

Over the past years, I have obtained a variety of buck and boost converters, mostly from AliExpress and eBay. Now that I finally have a way of characterizing them, I am curious about their performance in practice.

Today's specimen is an MT3608-based step up / boost converter from AliExpress. It's sold with a microUSB input, so boosting 5V from USB to 9V or 12V is a likely application. However, given its low quiescent current, it also seems like a good candidate for boosting ~3.7V from a LiIon battery to 5V for USB.

Specs

Advertised ratings vary. The following conservative estimates might be close to reality.

- Input range: 3V .. 24 V

- Output range: 5V .. 28V

- Maximum input current: 0.8 A (1 A?) continuous, 2 A burst.

- Quiescent current: ~100µA

- Maybe: thermal overload protection

- Maybe: internal 4 A over-current limit

The boost modules I have use a multi-turn potentiometer to configure output voltage.

Application

In this setup, I focused on boosting LiIon voltage to 5V for USB output. LiIon voltage typically ranges from 3.0 to 4.2 V -- I went up to 4.5 V just to gather some more data.

Caution

I took reasonable care to calibrate my readings, but will not give any guarantees. The following results might not be close to reality, and might be affected by knock-off chips and sub-par circuit design.

Output Voltage Stability

Both input voltage and output power of a boost converter can vary over time, especially when powered via a LiIon battery. Its output voltage should remain constant in all cases, or only sag a little under load. Most importantly, it should never exceed its idle output voltage – otherwise, connected devices may break.

Up to about 400 mA output current, the converter is well-behaved. Beyond that (i.e., once its input current exceeds 800mA), its output voltage is all over the place – both below and above the set point. With an observed range of 4.5 to 5.7 V, it is also way outside the USB specification, which states that devices must accept 4.5 to 5.2 V.

So, I'd strongly advise against using this chip to power USB devices that may draw more than a few hundred mA.
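The 400 mA / 800 mA relationship follows from the power balance of a boost converter, V_in · I_in · η = V_out · I_out. A small C sketch with an assumed efficiency of 85% (my assumption, not a value measured in this benchmark) shows why a nearly empty LiIon cell hits the input-current limit first; the 4.5 to 5.2 V window is the one quoted above.

```c
#include <assert.h>
#include <stdbool.h>

/* Input-current threshold beyond which the tested modules misbehaved. */
#define MT3608_STABLE_INPUT_LIMIT_A 0.8

/*
 * Input current of a boost converter from the power balance
 *   V_in * I_in * efficiency = V_out * I_out
 * with efficiency given as a fraction (0 < efficiency <= 1).
 */
static double boost_input_current(double v_in, double v_out, double i_out,
                                  double efficiency)
{
    assert(v_in > 0.0 && efficiency > 0.0 && efficiency <= 1.0);
    return (v_out * i_out) / (v_in * efficiency);
}

/* Voltage window for USB devices as quoted in this post. */
static bool usb_voltage_ok(double v)
{
    return v >= 4.5 && v <= 5.2;
}
```

At 3.0 V input and 400 mA output, this yields roughly 0.78 A input current, right around the point where the modules became unstable; at 500 mA output, it is already close to 1 A.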

At 9V and 12V output, I did not notice issues at up to 400 mA, but did not measure anything beyond that. Also, the measurement setup for these two benchmarks was a bit less accurate.

Efficiency

In low-power operation (no more than a few hundred mA), the converter is quite efficient. Beyond that (i.e., in the unstable output voltage area) its efficiency varies as well.

Further Observations

Once input current exceeds 800mA, the devices I have here emit a relatively loud, high-frequency noise.

TL;DR

Exercise caution to avoid frying USB devices.

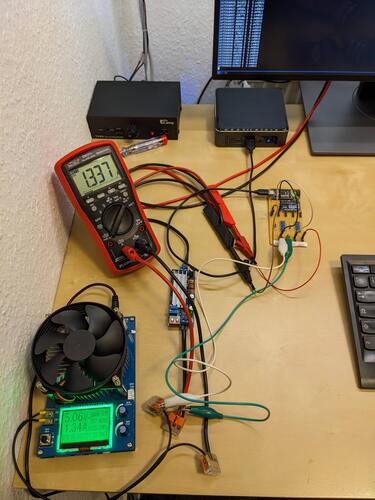

Building a test setup for benchmarking buck/boost converters

I have a growing collection of mostly cheap buck/boost converters and am kinda curious about their efficiency and output voltage stability.

Measuring that typically entails varying input voltage and output current while logging input voltage (V_i), input current (I_i), output voltage (V_o), and output current (I_o).

For each reading, efficiency is then defined as (V_o · I_o) / (V_i · I_i) · 100%.

    +-------------+
    |             |
    |    Input    |
    |             |
    +---+-----+---+
        |     |
        |    +-+
        |    I_i
        |    +-+
        +-V_i-+
        |     |
    +---+-----+---+
    |             |
    |  Converter  |
    |    under    |
    |    Test     |
    |             |
    +---+-----+---+
        |     |
        +-V_o-+
        |    +-+
        |    I_o
        |    +-+
        |     |
    +---+-----+---+
    |             |
    |   Output    |
    |             |
    +-------------+
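The efficiency formula maps directly onto one logged reading. A minimal C sketch; the struct and field names are illustrative, and in the setup described next, V_i and V_o come from the ADC, I_i from the lab PSU, and I_o is whatever the electronic load is set to:

```c
#include <assert.h>

/* One logged reading of the converter under test. */
struct reading {
    double v_i, i_i;    /* input voltage (V) and current (A) */
    double v_o, i_o;    /* output voltage (V) and current (A) */
};

/* Efficiency in percent: (V_o * I_o) / (V_i * I_i) * 100%. */
static double efficiency_percent(const struct reading *r)
{
    double p_in = r->v_i * r->i_i;
    assert(p_in > 0.0);
    return r->v_o * r->i_o / p_in * 100.0;
}
```

For example, 12 V at 0.5 A in and 5 V at 1.08 A out gives 5.4 W / 6 W, i.e. 90%.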

The professional method of obtaining these values would probably involve a Source/Measure Unit (SMU) with 4-wire sensing and remote control to automatically vary input voltage / output current while logging voltage and current readings to a database. I do not have such a device here -- first, they tend to cost €€€€ or even €€€€€, and second, many of those are more at home in the single-digit Watt range. I do, however, have a lab PSU with remote control and access to output voltage and current readings, a cheap electronic load (without remote control), an ADS1115 16-Bit ADC for voltage measurements, and an ATMega328 for data logging. This allows me to manually set a constant output current I_o and then automatically vary the input voltage while logging V_i, I_i, and V_o.

There is just one catch: The ADS1115 cannot measure voltages that exceed its supply voltage (VCC, in this case 5V provided via USB). A voltage divider solves this, at the cost of causing a small current to flow through the divider rather than the buck/boost converter under test. In my case, I only had 10kΩ 1% resistors at hand, and used them to build an 8:1 divider for both differential ADS1115 input channels. This way, I can measure up to 40V, with up to 500µA flowing through the voltage divider. Compared to the 100 mA to several Amperes I intend to use this setup with, that is negligible.

                                  VCC  GND           VCC  GND
                                   |    |             |    |
                               +---+----+---+     +---+----+---+
    V_i + --[70k]--+-----------+A0          |     |            |
                   |           |            |     |            |
                 [10k]         |            |     |            |
                   |           |            |     |            |
    V_i - ---------+-----------+A1       SCL+-----+SCL       TX+---- to USB-serial converter
                               |  ADS1115   |     | ATMega328  |
    V_o + --[70k]--+-----------+A2       SDA+-----+SDA         |
                   |           |            |     |            |
                 [10k]         |            |     |            |
                   |           |            |     |            |
    V_o - ---------+-----------+A3          |     |            |
                               +------------+     +------------+
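Converting an ADS1115 reading back to the voltage at the divider input is straightforward: the chip returns a signed 16-bit value, so one LSB is the selected full-scale range divided by 32768, and the divider scales that by (70k + 10k) / 10k = 8. The sketch below assumes the ±6.144 V PGA setting (the post does not say which range is used; at 40 V input the pins see 5 V, which the ±4.096 V range could not represent) and leaves out the calibration factors mentioned below.

```c
#include <assert.h>
#include <stdint.h>

/* Divider from the schematic: 70 kOhm (7 x 10 kOhm) on top, 10 kOhm at the bottom. */
#define R_TOP_OHMS    70000.0
#define R_BOTTOM_OHMS 10000.0

/* Assumed PGA setting: +-6.144 V full scale, i.e. 187.5 uV per LSB. */
#define ADS1115_FSR_VOLTS 6.144

/* Convert a signed 16-bit differential ADS1115 result to volts at the pins. */
static double ads1115_raw_to_volts(int16_t raw)
{
    return raw * ADS1115_FSR_VOLTS / 32768.0;
}

/* Scale the pin voltage back up by the divider ratio (80k / 10k = 8). */
static double divider_input_volts(int16_t raw)
{
    return ads1115_raw_to_volts(raw) * (R_TOP_OHMS + R_BOTTOM_OHMS) / R_BOTTOM_OHMS;
}

/* Current drawn by the divider itself at a given input voltage. */
static double divider_current_amps(double v_in)
{
    return v_in / (R_TOP_OHMS + R_BOTTOM_OHMS);
}
```

At 40 V, the divider draws 40 V / 80 kΩ = 500 µA, matching the figure above.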

Of course, this whole contraption is far from certifiably accurate or ppm-safe, and even less so when looking at the real-world setup on my desk.

I did however find it to be accurate within ±10mV after some calibration, so it is sufficient to determine whether a converter is in the 80% or 90% efficiency neighbourhood and whether it actually outputs the configured 5.2V or decides to go up to 5.5V under certain load conditions. Luckily, that is all I need.

The bottom line here is: If you have sufficiently simple / low-accuracy requirements, cheap components that may already be lying around in some forgotten project drawer can be quite useful. Also, I really like how easy working with the ADS1115 chip is :)

Logging HomeAssistant data to InfluxDB

I'm running a Home Assistant instance at home to have a nice graphical sensor overview and home control interface for the various more or less DIY-ish devices I use. Since I like to monitor the hell out of everything, I also operate an InfluxDB for longer-term storage and fancy plots of sensor readings.

Most of my ESP8266 and Raspberry Pi-based DIY sensors report both to MQTT (→ Home Assistant) and InfluxDB. For Zigbee devices I'm using a small script that parses MQTT messages (intended for Zigbee2MQTT ↔ Home Assistant integration) and passes them on to InfluxDB. However, there are also devices that are neither DIY nor using Zigbee, such as the storage and battery readings logged by the Home Assistant app on my smartphone.

Luckily, Home Assistant has a REST API that can be used to query device states (including sensor readings) with token-based authentication. So, all a Home Assistant to InfluxDB gateway needs to do is query the REST API periodically and write the state of all relevant sensors to InfluxDB. For binary sensors (e.g. switch states), this is really all there is to it.

For numeric sensors (e.g. battery charge), especially with an irregular update schedule, the script should take its last update into account. This way, InfluxDB can properly interpolate between data points, producing (IMHO) much prettier graphs than Home Assistant does. If you also want to extend your Home Assistant setup with InfluxDB, c hass to influxdb may be a helpful starting point.
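Writing such readings boils down to emitting InfluxDB line protocol. The following C sketch is not the script linked above; it formats one numeric state as a point and uses the state's last_updated time (from the /api/states response, assumed to be already converted to Unix seconds) as the timestamp. The measurement name hass and the tag key entity_id are hypothetical choices.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/*
 * Escape a tag value for InfluxDB line protocol: commas, spaces and
 * equals signs must be preceded by a backslash. Truncates if dst is
 * too small.
 */
static void escape_tag(char *dst, size_t dstlen, const char *src)
{
    size_t j = 0;
    assert(dstlen > 0);
    for (; *src != '\0' && j + 2 < dstlen; src++) {
        if (*src == ',' || *src == ' ' || *src == '=') {
            dst[j++] = '\\';
        }
        dst[j++] = *src;
    }
    dst[j] = '\0';
}

/*
 * Format one numeric sensor state as a line-protocol point, e.g.
 *   hass,entity_id=sensor.phone_battery value=87 1717000000000000000
 * The timestamp is last_updated in seconds, written in nanoseconds
 * (line protocol's default precision). Returns the snprintf() result.
 */
static int format_point(char *buf, size_t len, const char *entity_id,
                        double value, long long last_updated_s)
{
    char tag[256];
    escape_tag(tag, sizeof tag, entity_id);
    return snprintf(buf, len, "hass,entity_id=%s value=%g %lld000000000",
                    tag, value, last_updated_s);
}
```

Using last_updated rather than the polling time keeps points at the moment the sensor actually changed, which is what lets InfluxDB interpolate sensibly between irregular updates.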

PowerCore+ 26800 Teardown

After nearly five years of service, my PowerCore+ 26800 power bank broke down recently. After a few weeks with an only intermittently working USB-C output, it stopped providing power altogether – and also stopped accepting power to recharge the battery pack, providing a distinct smell of magic smoke and an internal short circuit instead.

As the power bank is out of warranty anyway, this is a good opportunity for a happy little autopsy.

Caution: This powerbank contains nearly 100 Wh worth of LiIon cells. In normal operation, the (dis)charge PCB is in charge of battery management tasks like short circuit prevention. Disassembling the device exposes raw LiIon cells, which typically do not contain separate protection circuitry. Puncturing, shorting, or otherwise mishandling one of those can lead to fire. Don't disassemble a powerbank unless you know what you are doing.

Case Teardown

The top and bottom plastic covers are glued on and can be pried open with moderate effort, revealing four screws each.

After removing the screws and a second (also glued-on) top cover, you can push onto the connector board (top) to coax the cell and PCB assembly out of the case. A good spot for application of force is the plastic surface next to the USB-C port. It's a tight fit, so the assembly won't slide out by itself. Pushing from the bottom won't work.

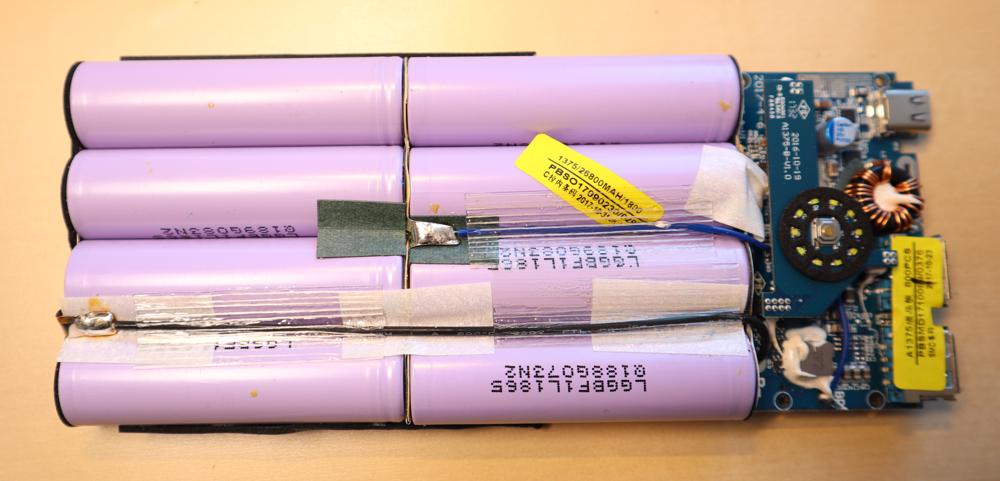

The LiIon cell layout is 2S4P with balancing. The cells are labeled “LGGBF1L1865”, which appears to correspond to LG's INR18650F1L model.

Each cell is rated as follows:

- Nominal capacity: 3.3 Ah at 3.63 V (12 Wh)

- Charge current: 0.3C (975 mA) nominal, 0.5C (1625 mA) maximum, 4.2 V / 50 mA cut-off

- Discharge current: 0.2C (650 mA) nominal, up to 1.5C (4875 mA) maximum, 2.5V cut-off

For the 2S4P pack, this gives:

- Nominal capacity: 13.2 Ah at 7.26 V (96 Wh)

- Charge current: 0.3C (3.9 A) nominal, 0.5C (6.5 A) maximum, 200mA cut-off

- Discharge current: 0.2C (2.6 A) nominal, up to 1.5C (19.5 A) maximum, 5.0V cut-off
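The pack figures follow mechanically from the cell ratings: a series connection multiplies voltages (including the cut-off), a parallel connection multiplies capacity and currents. A small C sketch of that arithmetic, using the F1L values listed above (the struct layout is my own):

```c
#include <assert.h>

/* Ratings of a single cell, or of a whole pack. */
struct cell {
    double capacity_ah;      /* nominal capacity */
    double voltage_v;        /* nominal voltage */
    double charge_a;         /* nominal charge current */
    double discharge_max_a;  /* maximum discharge current */
    double cutoff_v;         /* discharge cut-off voltage */
};

/*
 * Build an sSpP pack: series cells add up voltages, parallel cells add
 * up capacity and currents, so energy scales with s * p.
 */
static struct cell pack_from_cell(struct cell c, int s, int p)
{
    assert(s > 0 && p > 0);
    struct cell pack = {
        .capacity_ah = c.capacity_ah * p,
        .voltage_v = c.voltage_v * s,
        .charge_a = c.charge_a * p,
        .discharge_max_a = c.discharge_max_a * p,
        .cutoff_v = c.cutoff_v * s,
    };
    return pack;
}

/* Nominal energy in watt-hours. */
static double energy_wh(struct cell c)
{
    return c.capacity_ah * c.voltage_v;
}
```

For 2S4P, this gives 13.2 Ah × 7.26 V ≈ 95.8 Wh, roughly the same energy that the product specifications express as 26.8 Ah at 3.6 V, just referenced to a single cell's voltage.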

For comparison, the powerbank's product specifications state:

- Capacity: 26.8 Ah at 3.6V (96 Wh)

- Input: Up to 27 W (9 V, 3 A) → Charging probably uses less than 0.3C

- Output: Up to 25 W (20 V, 1.25 A) via USB-C + about 20 W (5 V, 2 A) via USB-A → Discharge current is up to 0.5C (6.6 A)

My mostly discharged cells read 3.18 and 3.20V, respectively, so the cell management chip seems to be operating in a rather conservative voltage range. This should be good for longevity.

PCB Teardown

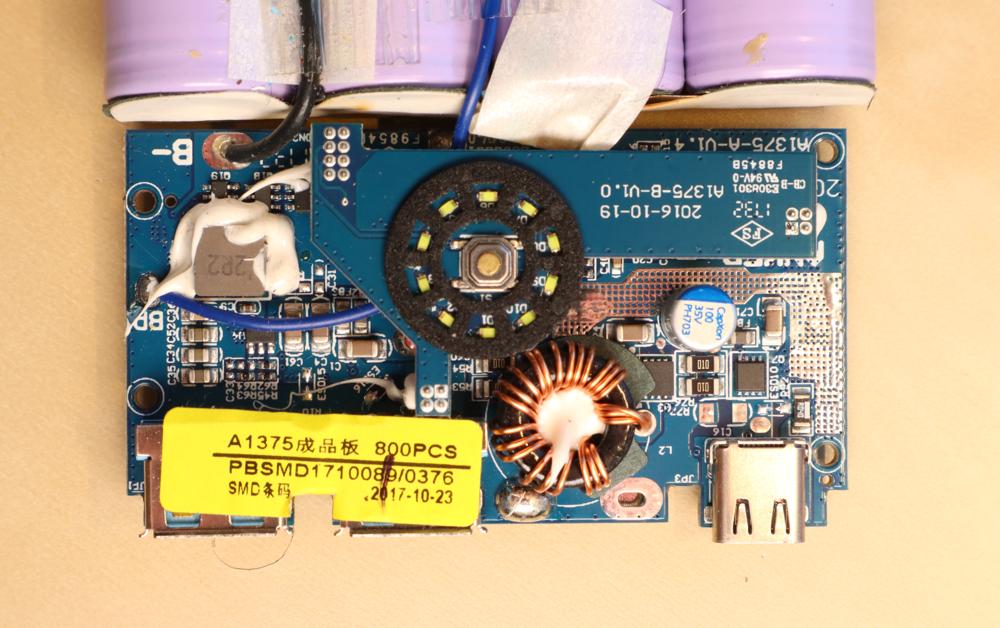

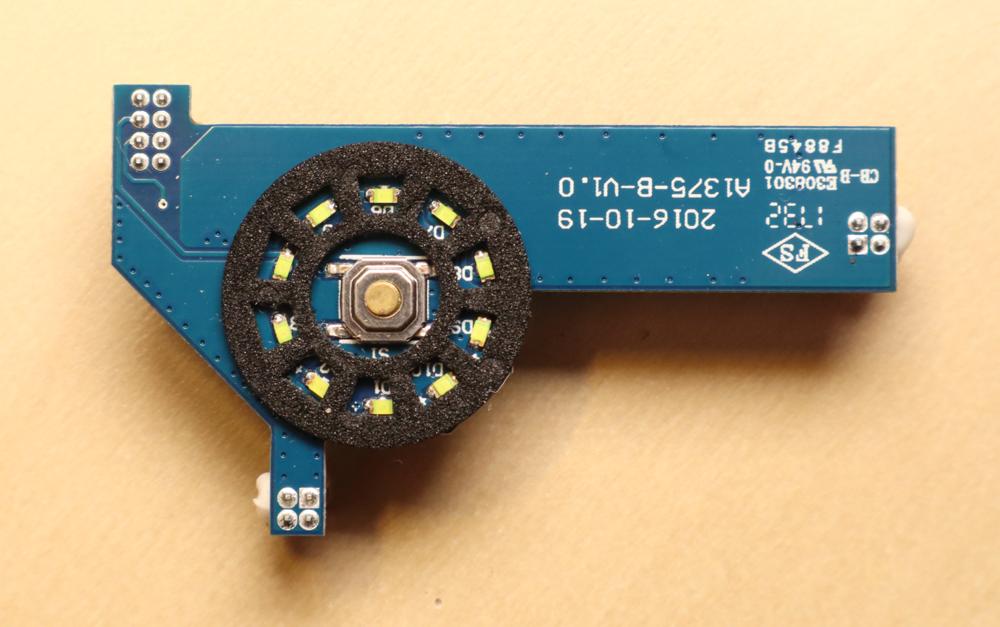

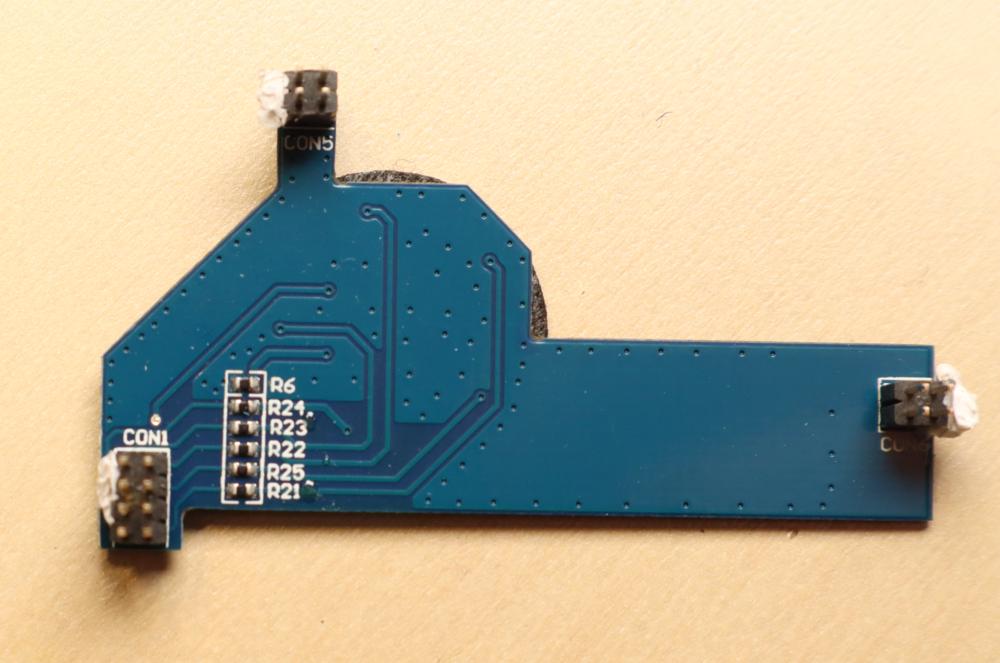

The top PCB is only responsible for LED output and button input.

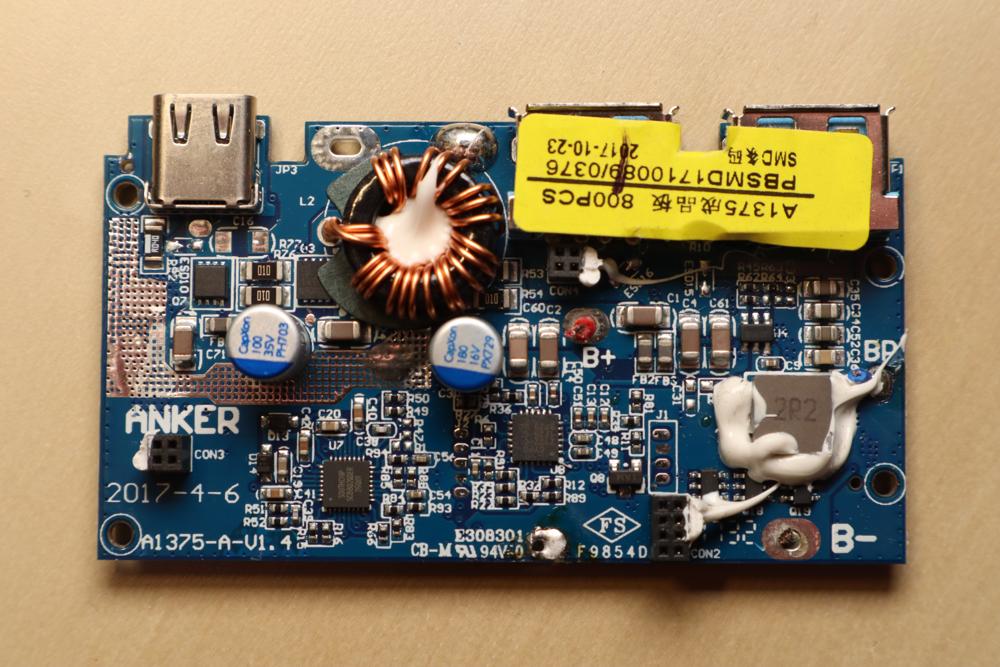

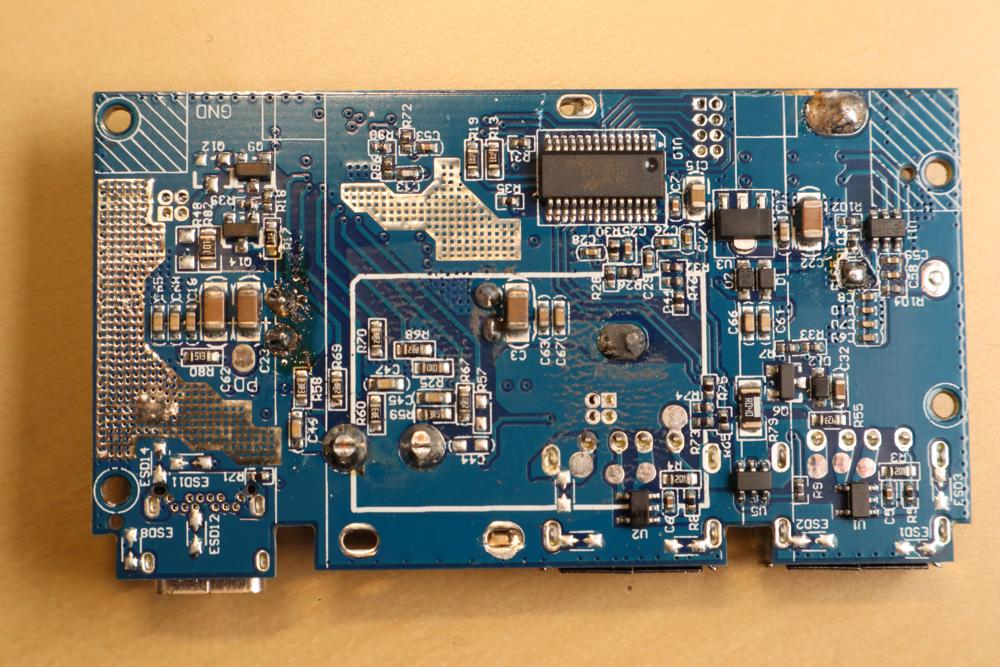

The bottom PCB includes an SC8802 synchronous, bi-directional, 4-switch buck-boost charger controller and a HT66F319 microcontroller.

The Culprit

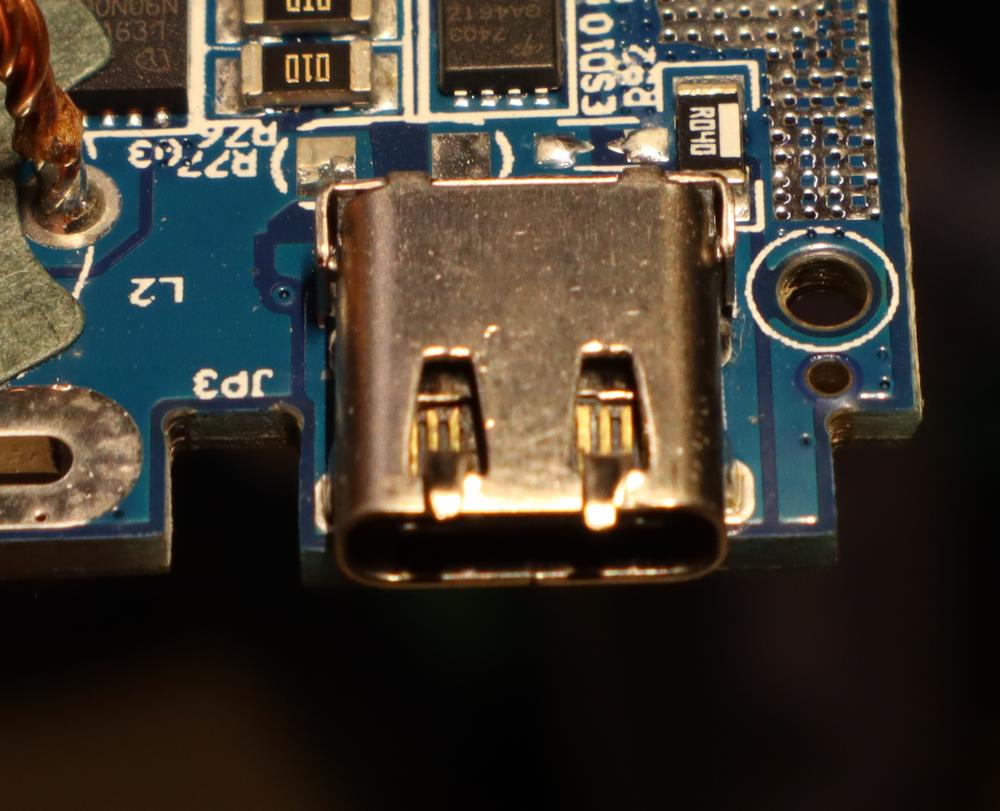

Even with disconnected batteries, there's a two-ohm short circuit between USB-C VCC and USB-C GND. The culprit turned out to be the USB-C plug itself.

USB-C plugs contain a tiny PCB with contacts on both sides that the cable slides onto. In this case, the lower (recessed, non-contact) part of the PCB is embedded into a metal piece for stability. Over time, the metal piece had moved towards the contacts, eventually causing an electrical connection and thus a short circuit. After moving it back, the power bank is working again. I don't trust the USB-C port anymore, though.
